Forecasting solar power output to assist with the integration of solar energy into the power grid

Received: 15-Mar-2023, Manuscript No. puljpam-23-6321; Editor assigned: 17-Mar-2023, Pre QC No. puljpam-23-6321 (PQ); Accepted Date: Mar 27, 2023; Reviewed: 20-Mar-2023 QC No. puljpam-23-6321 (Q); Revised: 22-Mar-2023, Manuscript No. puljpam-23-6321 (R); Published: 31-Mar-2023, DOI: 10.37532/2752-8081.23.7(2).116-124

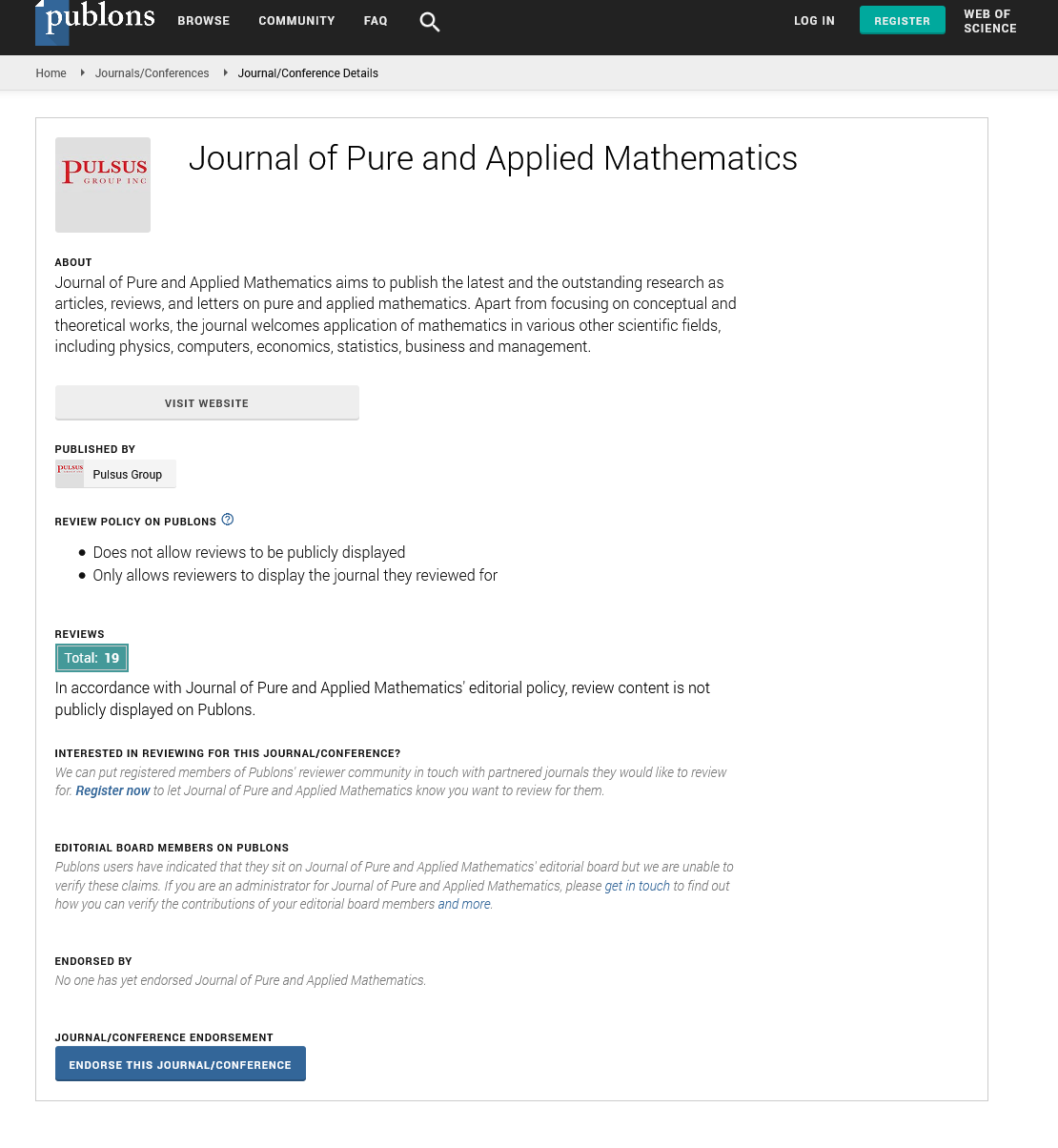

Citation: Garg A. Forecasting solar power output to assist with the integration of solar energy into the power grid. J Pure Appl Math. 2023; 7(2):116-124

This open-access article is distributed under the terms of the Creative Commons Attribution Non-Commercial License (CC BY-NC) (http://creativecommons.org/licenses/by-nc/4.0/), which permits reuse, distribution and reproduction of the article, provided that the original work is properly cited and the reuse is restricted to noncommercial purposes. For commercial reuse, contact reprints@pulsus.com

Abstract

This paper discusses the integration of solar energy into the power grid by forecasting solar power ahead of time, formulated as a time series forecasting problem. The aim is to help reduce the impact of fossil fuels on the environment. The two main objectives are to forecast PV power one time step ahead and to forecast PV power multiple time steps ahead. Data has been taken from the Hong Kong University of Science and Technology. This paper compares machine learning techniques such as CNN LSTM, RNN LSTM, Dense Neural Network and Convolutional Neural Network to find the best predictor of PV output. The tuning of hyperparameters such as the learning rate, regularization parameter, activation function and number of iterations is also an essential part of the discussion. The Long Short Term Memory (LSTM) layers, dense feed forward layers and convolutional layers help to derive new important features from the original features. The paper closes with a summary of future work to assist additional research and improve results.

Methodology

Single step model

The single step model involves taking inputs of a certain window length, and producing a single output from these inputs. This is then compared against the actual output/label, and the MAE is found, which is fed back to the model. The figure below gives a visual representation of the single-step forecasting model (Figure 5).

Multi step model

The multi-step model involves taking inputs of a certain window length and producing an output spanning multiple timesteps ahead from these inputs. These outputs are compared against the actual outputs, and the MAE is found, which is fed back to the model. Figure 6 gives a visual representation of the multi-step forecasting model.
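The windowing described for the single step and multi step models can be sketched as follows. This is a minimal illustration rather than the code used in the paper: the function name `make_windows`, the toy data and the choice of column 0 as the label (PV output) are assumptions made for the example.

```python
import numpy as np

def make_windows(series, input_width, label_width):
    """Slice a (time, features) array into (inputs, labels) pairs.

    Column 0 is assumed to hold the label (PV output); this is a
    hypothetical layout chosen for the example.
    """
    series = np.asarray(series, dtype=float)
    n = len(series) - input_width - label_width + 1
    if n <= 0:
        raise ValueError("series is too short for the requested window")
    # Each input is `input_width` consecutive timesteps of all features.
    inputs = np.stack([series[i:i + input_width] for i in range(n)])
    # Each label is the next `label_width` values of the label column.
    labels = np.stack([
        series[i + input_width:i + input_width + label_width, 0]
        for i in range(n)
    ])
    return inputs, labels

# Toy data: 10 timesteps, 2 features.
data = np.arange(20, dtype=float).reshape(10, 2)

# Single step: 3 timesteps in, 1 timestep out.
single_x, single_y = make_windows(data, input_width=3, label_width=1)
# Multi step: 3 timesteps in, 4 timesteps out.
multi_x, multi_y = make_windows(data, input_width=3, label_width=4)
```

The only difference between the two formulations is the label width: the same inputs are paired with either one future value or a block of future values.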

Performance metric

The metric being used is the Mean Absolute Error (MAE), as it is the most appropriate metric once the parameters have been normalized. Since the features are normalized to the range 0-1, the MAE can be read as the mean absolute error expressed as a percentage of that range, and is easily interpretable when evaluating performance every time a hyperparameter is changed.
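As a small worked example, the MAE is the mean of the absolute differences between the actual and predicted values. The values below are made up for illustration and are not results from the paper.

```python
import numpy as np

def mean_absolute_error(y_true, y_pred):
    """Mean of |actual - predicted| over all samples."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))

# Illustrative normalized PV outputs (range 0-1), not data from the paper.
y_true = [0.20, 0.50, 0.80, 0.40]
y_pred = [0.25, 0.45, 0.70, 0.40]
mae = mean_absolute_error(y_true, y_pred)
```

Here the absolute errors are 0.05, 0.05, 0.10 and 0.00, so the MAE is 0.05, which on normalized data corresponds to 5% of the feature range.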

References

- Akhter MN, Mekhilef S, Mokhlis H, et al. A hybrid deep learning method for an hour ahead power output forecasting of three different photovoltaic systems. Appl Energy. 2022; 307 (1).

- Isaksson E, Karpe Conde M. Solar power forecasting with machine learning techniques. 2018.

- Alam AM, Razee IA, Zunaed M. Solar PV power forecasting using traditional methods and machine learning techniques. Kans Power Energy Conf. 2021; 1-5.

- Gensler A, Henze J, Sick B, et al. Deep Learning for solar power forecasting—An approach using AutoEncoder and LSTM Neural Networks. Int conf syst man cybern. 2016.

- Pedregal DJ, Trapero JR. Adjusted combination of moving averages: A forecasting system for medium-term solar irradiance. Appl Energy. 2021.

- Zheng J, Zhang H, Dai Y, et al. Time series prediction for output of multi-region solar power plants. Appl Energy. 2020.

- Feng C, Zhang J, Zhang W, et al. Convolutional neural networks for intra-hour solar forecasting based on sky image sequences. Appl Energy. 2022; 310 (3).

- Theocharides S, Makrides G, Livera A, et al. Day-ahead photovoltaic power production forecasting methodology based on machine learning and statistical post-processing. Appl Energy. 2020.

- Elfeky MG, Aref WG, Elmagarmid AK. Periodicity detection in time series databases. Trans Knowl Data Eng. 2005; 17(7):875-87.

- Luo X, Zhang D, Zhu X. Deep learning based forecasting of photovoltaic power generation by incorporating domain knowledge. Energy. 2021.

- Wang Z, Koprinska I, Rana M. Solar power forecasting using pattern sequences. Int Conf Artif Neural Netw. 2017; 486-494.

Acknowledgements

I thank Professor Zhongming Lu (Hong Kong University of Science and Technology) for guiding me through this project and providing helpful insights, as well as helping me access weather features data from the HKUST Supersite.

I thank Li Mengru (Hong Kong University of Science and Technology) for helping to provide me with data for the PV output.

I thank Professor Wei Shyy (President of Hong Kong University of Science and Technology) for giving me an opportunity to work with one of the esteemed professors in the university.

I thank Professor Hong K. Lo, JP (Chair Professor of Department of Civil and Environmental Engineering at HKUST) for introducing me to Professor Zhongming Lu.

Conclusion and Scope for Future Work

From the results above, it can be concluded that the best technique for solar PV forecasting of one timestep ahead is the CNN LSTM method, and the best technique for solar PV forecasting of multiple timesteps ahead is the AR LSTM method. For both techniques, the common layer in the model is the LSTM layer, which helps to remove unimportant features and gives important features higher weights. For the single timestep ahead forecasting, the convolutional layer helps to split the original features into multiple different features, which are fed into the LSTM layer to find the most important features. For the multiple timestep ahead forecasting, the autoregression of the AR LSTM model helps to better predict the weather data for multiple timesteps ahead, and this in turn makes it easier to predict the solar PV output data.

To give the model a better sense of seasonality, the dataset could be split into training, validation and test sets differently. Instead of choosing the first 75% of the whole dataset to be training data, the first 75% of the data for each month could be chosen as training data, the next 15% of each month as validation data and the last 10% of each month as test data. In this manner, the algorithm would see every month during training and could learn the seasonality of the different months, which should improve the model.
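The proposed per-month split could be sketched as below. This is an illustration of the idea only, since the paper does not implement it; the function name `split_within_each_month` and the toy hourly timestamps are assumptions.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def split_within_each_month(timestamps, train_pct=75, val_pct=15):
    """Split each calendar month chronologically into train/val/test."""
    by_month = defaultdict(list)
    for ts in sorted(timestamps):
        by_month[(ts.year, ts.month)].append(ts)

    train, val, test = [], [], []
    for month in sorted(by_month):
        rows = by_month[month]
        # Integer arithmetic avoids floating point rounding surprises.
        train_end = len(rows) * train_pct // 100
        val_end = len(rows) * (train_pct + val_pct) // 100
        train += rows[:train_end]
        val += rows[train_end:val_end]
        test += rows[val_end:]
    return train, val, test

# Toy example: hourly timestamps for January and February 2021.
start = datetime(2021, 1, 1)
hours = [start + timedelta(hours=h) for h in range(24 * (31 + 28))]
train, val, test = split_within_each_month(hours)
```

Every month then contributes to all three sets, while the ordering inside each month stays chronological.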

Another method could be used for future improvements. It involves clustering days of data into certain categories, and then performing pairwise predictions on this data using k-nearest neighbors and neural networks. This is useful if the weather does not change drastically throughout a day, as it classifies a day as sunny or rainy and then calculates the PV output given this classification.

Discussion of Results

For the single time step ahead forecasting, the results can be seen in Figure 15 and Figure 16. As expected, the CNN LSTM model had the best results, with a 6% error. This is due to the convolutional layer and the LSTM layer, which help to adapt the given features into different features. Given such a large number of features, the dense network was able to assign weights to the original features via backward propagation. The RNN LSTM model also performed quite well, as the LSTM layer was able to give the RNN layer weights that help the model remember or forget features depending on their importance. Additionally, the problem of vanishing gradients was not present, hence the model was able to backward propagate to give all the weights.

Multi step ahead forecasting

In this model, the errors were found to be much higher than in the single step ahead forecasting. The baseline algorithm gave significantly higher errors, of around 0.38 or 0.39, in comparison to the other techniques, which gave errors of close to 0.11 or 0.12. This is because autoregression is more useful in multiple step ahead forecasting than in single step ahead forecasting. Therefore, the AR LSTM model has done the best on this data, whereas the other models have not done as well (Figures 17-22).

[Figures 17-22 (panel titles): Linear weights, AR LSTM, RNN LSTM, CNN, Dense, Linear]

Comparison

As can be seen from the figures below (Figures 23, 24), the results for the AR LSTM model are the best, with an error of close to 11% for each of the training, test and validation sets. The baseline models have a very high error, as they are unable to perform autoregression; the data changes drastically when forecasting multiple hours ahead, and hence the models that do autoregression show a marked improvement. The RNN LSTM model was also very successful, because it used the LSTM layer as well, which helped to reduce the unimportant features and emphasize the more important ones. For the RNN in particular, the LSTM layer also got rid of the vanishing gradient problem, which gave results of close to 12% error. The errors of the multiple step forecasting, however, are significantly higher than those of the single step ahead forecasting, because the errors accumulate with each timestep the model is forecasting.

Results table

Comparison

As can be seen from the figures (Figures 15 and 16), the results for the CNN LSTM model are the best, with an error of less than 8% for the training set and validation set. Additionally, the RNN LSTM model is also quite successful, giving very similar errors to the CNN LSTM model. This implies that the LSTM layer is highly beneficial in the prediction, and helps with extracting the most important features.

Results Table

Results

Single step ahead model

[Figure panels for the single step ahead model: Linear weights, Linear, CNN LSTM, RNN LSTM, CNN]

Hyperparameters

In this section, the paper discusses the different hyperparameters which need to be tuned and the values they have.

Regularization parameter (λ) and number of epochs

The number of epochs, or iterations the model had to run on the training set, is another important hyperparameter. Tuning it was highly important to get the optimal weights for the model and to ensure that neither underfitting nor overfitting was taking place. In addition to this, another hyperparameter which was used to prevent overfitting was the regularization parameter, λ. This added weight penalized overfitting, but it had to be tuned so that it was neither too low, which would have caused overfitting, nor too high, which would have caused underfitting. After testing 4 times, the optimal value for λ turned out to be 1e-2 for the CNN LSTM model and 1e-1 for all the other machine learning models.
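The paper does not state which form of penalty was used. Assuming an L2 penalty (the sum of squared weights scaled by λ) added to the MAE, the effect of λ on the training loss can be sketched as follows; the function name, weights and data are illustrative only.

```python
import numpy as np

def regularized_loss(y_true, y_pred, weights, lam):
    """MAE plus an L2 weight penalty (the L2 form is an assumption)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    mae = np.mean(np.abs(y_true - y_pred))
    penalty = lam * np.sum(np.square(weights))
    return float(mae + penalty)

# Illustrative weights and predictions, not values from the paper.
weights = np.array([0.5, -1.0, 2.0])
loss_low = regularized_loss([0.2, 0.6], [0.3, 0.5], weights, lam=1e-2)
loss_high = regularized_loss([0.2, 0.6], [0.3, 0.5], weights, lam=1e-1)
```

With the same predictions, a larger λ makes large weights more expensive, which pushes the optimizer towards smaller weights and a simpler fit.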

Convolution window

Another very important hyperparameter for the convolutional neural network was the window width. This determines how many previous timesteps are used to predict the next timestep. For example, the convolutional window width used for the CNN and CNN LSTM was 7 timesteps, as it gave results with a lower MAE than any of the window sizes greater or smaller than 7.

Learning Rate (α)

Another hyperparameter which required tuning was the learning rate α. It is necessary to tune the learning rate to an optimum to ensure that neither overshooting nor slow gradient descent takes place. After 5 iterations of the program, the optimal learning rate was found to be 8e-4.

Activation function

The activation function was another very important hyperparameter to tune. The method used to find the optimal activation function was to take the average of three runs for each of the three activation functions (tanh, relu and sigmoid) and compare the mean absolute percentage error for each of the functions. From the results of the three runs, it could clearly be seen that the optimal function was the tanh activation function. This was a very important hyperparameter, as it caused a significant increase in the model performance.

Optimizer adam

The optimizer being used is the Adam (Adaptive Moment Estimation) optimizer, as it is computationally efficient and requires less manual tuning than other optimizers, since it adapts the step size of each parameter by itself (Figures 10-14).

Different Techniques Used

There are many different techniques that can be used for this time series forecasting problem.

Linear

This technique is a simple multiple linear regression, with the algorithm assigning weights to all weather parameters and predicting the PV output of the next timestep. This technique involves auto-regression of weather parameters and PV output, so that weights can be given to the weather parameters for the next timestep for the multiple timesteps ahead forecasting objective.

CNN

This technique involved the usage of 1 convolutional layer, 2 hidden dense layers with 64 units each and 1 output layer. The convolutional layer contained 32 filters and a 7x7 kernel, and fed into the dense layers. The window size used was 7 timesteps, as this was the most suitable window size, as discussed in the hyperparameters section. The convolutional layer is used to split each of the data features into parts that the dense neural network can predict more easily; weights are then given to the original inputs of the convolutional layer. Figure 7 shows an example of a convolutional window.

Multi step dense

This technique was similar to the single step dense neural network, as it involved 2 dense layers consisting of 64 units each and one output layer; however, the window size was 3 timesteps. This meant that the previous 3 timesteps were being used to predict the next timestep for the single step output. This technique is used to check how important autoregression of weather parameters is to the model. Three timesteps of input data give the algorithm better knowledge of the dependence of the next timestep on the previous timesteps (i.e., autoregression).

CNN LSTM

This technique involved the usage of 1 convolutional layer, 1 LSTM layer, 1 dense layer and 1 output layer. The convolutional layer contains 32 filters and a 7x7 kernel, with the tanh activation function. The window size used was 7 timesteps, as this was the most suitable window size, as discussed in the hyperparameters section. The LSTM layer contains 32 filters as well, whilst the dense neural network consisted of 1 hidden layer of 64 units. First the convolutional layer was used to extract deeper features from the original weather data, and then the LSTM layer was used to help with finding long term time series features. This gives the dense layer more features, which it was able to use to good effect. Then, backward propagation occurred, which helps to find the weights of the original inputs. With multiple timesteps ahead forecasting, the CNN LSTM model also has autoregression taking place, such that the results of the first output, as well as the previous 6 given inputs, are used to predict the results of the next output (Figure 8).

RNN LSTM

This technique involves the usage of one LSTM layer, which serves as a building block for the other layers. The LSTM assigns weights which help the RNN let new information in, forget information or assign it importance to the output. This technique is used instead of a normal recurrent neural network due to the issue of vanishing gradients [10-11]. Figure 9 gives a visual representation of the RNN model.

Dense neural network

This technique involved using 3 dense layers consisting of 64 units each, and one output layer. The window size for this technique is only 1 timestep, implying that only the previous timestep is used to predict the next timestep. This technique is used as it is important to draw a comparison between the other neural network techniques and the conventional neural network.

AR LSTM

This technique involves using one LSTM layer, one RNN layer, one dense layer and one output layer. This technique helps with the autoregression of PV through time, and therefore it is used for the multiple time steps forecasting.

This model has a clear advantage over the others, as it can be adjusted easily to give an output length of many timesteps ahead, and due to the autoregression, it has a higher accuracy rate. However, the downside is that this model only runs well on multiple timesteps forecasting, as it is an autoregressive model. For forecasting one timestep ahead, it would give results no different from the usual dense network.
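The autoregressive feedback loop behind this model can be sketched as follows. This is a simplified, univariate illustration: `mean_model` is a hypothetical stand-in for a trained network, and the paper's AR LSTM feeds back weather features as well as PV output.

```python
import numpy as np

def autoregressive_forecast(one_step_model, window, n_steps):
    """Roll a one-step predictor forward, feeding each output back in."""
    window = np.asarray(window, dtype=float).copy()
    predictions = []
    for _ in range(n_steps):
        next_value = float(one_step_model(window))
        predictions.append(next_value)
        # Slide the window: drop the oldest value, append the new prediction.
        window = np.append(window[1:], next_value)
    return np.array(predictions)

# Hypothetical stand-in for a trained model: the mean of the input window.
def mean_model(window):
    return window.mean()

forecast = autoregressive_forecast(mean_model, [0.2, 0.4, 0.6], n_steps=3)
```

Because each prediction becomes an input for the next step, the output length can be changed simply by changing `n_steps`, but any error in an early step is carried into the later ones.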

Baseline

The baseline model is used as a metric of comparison, as it shows the error of assuming that the next output is equal to the last PV output. If the baseline error is lower than the error of the other models, it is an indication that either the data is heavily skewed, or the model has been formulated incorrectly. In a multi-step model, an additional baseline technique is used, which repeats the pattern of the last n given inputs for the next n outputs, where n is the number of timesteps forecasted.
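Both baselines can be written in a few lines. The sketch below assumes a one-dimensional history of normalized PV output; the function names and sample values are illustrative.

```python
import numpy as np

def last_value_baseline(pv_history, n_steps):
    """Predict that every future step equals the last observed PV output."""
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    return np.full(n_steps, pv_history[-1], dtype=float)

def repeat_baseline(pv_history, n_steps):
    """Predict the next n steps by repeating the last n observed values."""
    if n_steps < 1 or n_steps > len(pv_history):
        raise ValueError("n_steps must be between 1 and len(pv_history)")
    return np.asarray(pv_history[-n_steps:], dtype=float)

# Illustrative history, not data from the paper.
history = np.array([0.1, 0.3, 0.6, 0.5, 0.2])
single_step = last_value_baseline(history, 1)
multi_last = last_value_baseline(history, 3)
multi_repeat = repeat_baseline(history, 3)
```

Any trained model should achieve a lower MAE than these predictions for the comparison to be meaningful.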

Keywords

Solar power forecasting; Machine learning; Renewable energy; Neural networks; Autoregression; Power integration

Data Preparation

In this section, the preparation stages of the data will be outlined.

Removing anomalous data from weather set

Before merging the data from weather features, it was essential to remove the anomalous data from the dataset. For example, the temperature data for certain hours happened to be -99999 degrees Celsius. This indicated that an error had occurred and the sensor was unable to give a reading for that hour, so such anomalous data was removed from the dataset. After that, the data was merged into a dataframe holding 2 years of weather data, collected hourly.
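A minimal sketch of this cleaning step, assuming the weather data is held in a pandas dataframe and that an hour is dropped whenever any feature holds the -99999 sentinel. The column names and values are made up for illustration.

```python
import pandas as pd

SENTINEL = -99999  # value reported in the text for failed sensor readings

# Illustrative hourly weather readings, not data from the paper.
weather = pd.DataFrame(
    {
        "temperature": [24.1, SENTINEL, 25.3, 26.0],
        "humidity": [80.0, 82.0, SENTINEL, 75.0],
    },
    index=pd.date_range(
        "2021-01-01 06:00", periods=4, freq=pd.Timedelta(hours=1)
    ),
)

# Mark sentinel readings as missing, then drop every hour with a missing value.
cleaned = weather.replace(SENTINEL, float("nan")).dropna()
```

Dropping whole rows keeps every remaining hour complete across all features, at the cost of leaving small gaps in the time axis.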

Feature normalization

For techniques involving machine learning, it is essential to do feature normalization before running the model. This is because features may have very different data ranges, in which case the weights assigned to them cannot be meaningfully compared. If instead all features are normalized, then the weights can be compared against each other, and thus the importance of each feature can be compared. This is highly important for analysis of models and of methods to increase model performance, as the data can be easily understood. Figure 2 shows a snapshot of the first 10 rows of the normalized dataframe.
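Normalizing each feature to the range 0-1 corresponds to min-max scaling. The sketch below assumes that the minimum and maximum are taken from the training data only, which is a common practice but is not stated in the paper; the function names and sample values are illustrative.

```python
import numpy as np

def fit_min_max(train):
    """Return the per-feature minimum and maximum of the training data."""
    train = np.asarray(train, dtype=float)
    return train.min(axis=0), train.max(axis=0)

def apply_min_max(x, lo, hi):
    """Scale each feature so the training range maps onto [0, 1]."""
    # Guard against constant features to avoid division by zero.
    span = np.where(hi > lo, hi - lo, 1.0)
    return (np.asarray(x, dtype=float) - lo) / span

# Two illustrative features with very different ranges
# (for example temperature and pressure).
train = np.array([[10.0, 1000.0], [20.0, 1010.0], [30.0, 1020.0]])
lo, hi = fit_min_max(train)
train_scaled = apply_min_max(train, lo, hi)
```

After scaling, both columns run from 0 to 1, so weights learned for them are on a comparable footing.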

Data transformation - day sin, day cos features

As discussed, it is very important to detect the periodicity and pattern of data, and hence creating features to detect this is highly important [9]. One key step required was to create a feature which captures the time of day in a numerical form and gives a repeating pattern of values for each new day. Hence, each date and time was converted to a timestamp, which was then multiplied by 2π and divided by the duration of a day. The cosine and sine of these values were taken to form a periodic curve for every 24 hours. A similar process could be used to find the seasonality of the year, by replacing the length of day with the length of year.
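Using the notation of the paper (t for the timestamp in seconds, d for the length of a day in seconds), the two features are sin(2πt/d) and cos(2πt/d). A minimal sketch, with the function name `day_features` chosen for the example:

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60  # d in the paper's notation

def day_features(timestamp_seconds):
    """Return (day sin, day cos) for a timestamp t given in seconds."""
    angle = 2 * math.pi * timestamp_seconds / SECONDS_PER_DAY
    return math.sin(angle), math.cos(angle)

midnight = day_features(0)
six_am = day_features(6 * 3600)
next_midnight = day_features(SECONDS_PER_DAY)
```

The pair of values repeats every 24 hours, and using both sine and cosine means that each time of day maps to a unique point on the unit circle.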

Training set - test set - validation set split

The data has been split into 75% training, 15% validation and 10% test data. The split must be made chronologically, so that each set is a contiguous block of time, as the data being used is in chronological order (by date and time) and the algorithm being run is a time-series forecasting model. Because the problem is a time-series formulation, the machine learning algorithm would not give proper weights if the order of the data were changed: if the data were split randomly, it would no longer be in time-series order and autoregression of the PV output would fail. The weather and solar output of the previous timestep do impact the weather and solar output of the next timestep, due to the physical constraints on how quickly the weather can change.
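A chronological 75/15/10 split amounts to slicing the time-ordered data without shuffling. The sketch below uses integer percentages to compute the boundaries; the function name and toy data are assumptions for the example.

```python
import numpy as np

def chronological_split(data, train_pct=75, val_pct=15):
    """Split time-ordered data into train/val/test without shuffling."""
    n = len(data)
    train_end = n * train_pct // 100
    val_end = n * (train_pct + val_pct) // 100
    return data[:train_end], data[train_end:val_end], data[val_end:]

# Toy example: 100 samples already in chronological order.
samples = np.arange(100)
train, val, test = chronological_split(samples)
```

The earliest samples go to training and the latest to testing, so the model is always evaluated on data that comes after what it was trained on.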

Cleaning the PV output data

Data for the PV output for each day was available for every 5 minutes between 5:00 am to 8:00 pm. This is because at any time after 8:00 pm and before 5:00 am, the sun was not out and therefore the PV output data was not collected during these times. Accurate data was collated into a dataframe, as shown below.

Merging PV and weather data

Merging the PV and weather data was tricky, as the PV data had been collected every 5 minutes whereas the weather data had been collected hourly. Therefore, when merging the PV and weather data, the largest common subset of both sets of times had to be chosen, along with the label data (PV output) and the input features (weather data). A sample of the dataframe with all the PV and weather data together can be seen in Figure 3.
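Choosing the largest common subset of times corresponds to an inner join on the timestamp. A minimal sketch with pandas, using made-up column names and values rather than the paper's data:

```python
import pandas as pd

# Illustrative PV readings every 5 minutes from 05:00 to 07:00.
pv = pd.DataFrame(
    {"pv_output": list(range(25))},
    index=pd.date_range(
        "2021-01-01 05:00", periods=25, freq=pd.Timedelta(minutes=5)
    ),
)

# Illustrative hourly weather readings from 04:00 to 07:00.
weather = pd.DataFrame(
    {"temperature": [18.0, 18.5, 19.0, 19.5]},
    index=pd.date_range(
        "2021-01-01 04:00", periods=4, freq=pd.Timedelta(hours=1)
    ),
)

# An inner join keeps only the timestamps present in both dataframes.
merged = pv.join(weather, how="inner")
```

Only the on-the-hour PV readings that also have a weather reading survive the join, which here leaves the rows for 05:00, 06:00 and 07:00.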

Violin plot

After the normalization of data, a violin plot was created to show the distribution of data for each feature, and evaluate the split of data for the entire dataset. Figure 4 was used to check for large anomalies in the data, and if so, to understand the cause behind this anomalous data, and whether any modification would be required.

List of Features Used in Modelling

• Windspeed

• Azimuth

• Solar Irradiance

• Pressure

• Visibility

• Humidity

• Temperature

• PV output

• Day sin

• Day cos

Notations

LSTM = Long Short Term Memory

CNN = Convolutional Neural Network

RNN = Recurrent Neural Network

ARIMA = Auto-Regressive Integrated Moving Average

PV = Photo-Voltaic

ANN = Artificial Neural Network

AR LSTM = Auto-Regressive Long Short Term Memory

α = learning rate

λ = regularization parameter

tanh = Hyperbolic Tangent Activation Function

relu = Rectified Linear Unit

Adam = Adaptive Moment Estimation Optimizer

Epochs = Number of Iterations

ConvWindow = Convolutional Window Width

MAE = Mean Absolute Error

val = cross-validation set

d = length of day in seconds

t = timestamp in seconds

x = data feature

x = normalized feature

Data Collection

There are many weather variables which may affect the solar power production of a certain hour. Some of these factors include solar irradiance, temperature, windspeed, humidity, time of day and time of year. The data from the past 2 years of hourly readings for these factors was collected from the Hong Kong University of Science and Technology (HKUST) Supersite, and PV data was collected from the HKUST Solar site. The site is located in the New Territories region of Hong Kong, and collects data using different types of sensors, including pressure sensors, temperature sensors and anemometers to measure windspeed.

Objectives

This paper discusses 2 main objectives:

• To predict PV output one timestep ahead

• To predict PV output multiple timesteps (14 hours) ahead

As discussed, these objectives are both of importance to help with planning, and the integration of renewable energy in the form of solar energy into the power grid.

Related Research

Previously, various techniques have been used to predict solar power output one hour ahead given hourly data. Techniques such as Artificial Neural Networks (ANN), Convolutional Neural Networks (CNN), multiple linear regression and support vector machines were used for prediction [1]. Mathematical models such as ARIMA (auto regressive integrated moving average) as well as machine learning algorithms were used, due to their ability to factor in the seasonality of winter vs summer and the time of day [2-3]. Other than the ones stated above, the most common techniques include Recurrent Neural Network (RNN), dense neural network and the combination of RNN and CNN with LSTM (long short term memory). CNN LSTM worked best on the data provided, with a mean absolute error of 5% [3]. Other deep learning techniques, such as Auto-LSTM and MLP (multiple layer perceptron), have been used for forecasting solar PV output, as in [4].

For multiple timestep forecasting, techniques similar to those used for single timestep forecasting are very common. In particular, the hybrid Convolutional LSTM model is the most common, as the convolutional layer and LSTM layer work well together, giving good approximations [5].

In the forecasting of PV output, the most common weather features chosen are solar irradiance, temperature, humidity, visibility, pressure, dew and windspeed, as can be seen in [6]. In particular, the solar irradiance feature is of great importance, as shown in [7]. Another feature which could be useful, as discussed in [8], is the azimuth. This is because it indicates the angular position of the Sun relative to the solar panel system, and theoretically should contribute a weight to the PV solar output.

Introduction

Due to rising global temperatures and the dire consequences of global warming, it is of utmost importance to work on reducing greenhouse gas emissions. The largest source of these is fossil fuels, therefore it is important to find alternative energy sources, such as renewable energy sources, to reduce the impact of global warming. Finding clean energy and integrating it into the energy grid is a huge step towards achieving the UN sustainable development goal number 7, as shown in Figure 1. However, it has been challenging for scientists and policy-makers to harness these renewable energy sources, especially solar energy, to the fullest extent due to the unreliability of such energy sources. Therefore, to aid with the integration of renewable energy into the energy grid, it is important to forecast the output of the energy sources. Solar energy is the most prominent source of renewable energy, so forecasting its output ahead of time makes it easier to integrate solar energy into the grid and reduces the reliance on fossil fuels.

PV cells convert light energy to electric energy using materials that exhibit the photovoltaic effect. The main issue is that solar panels, which make up a PV system, only work well if the sunlight falls directly on to the panel, and energy is lost when a tracking system is not established, hence building a PV forecasting system is of utmost importance. PV outputs vary throughout the day, given the varying solar irradiation and other weather conditions. Therefore, to integrate this resource into the energy grid whilst meeting consumer demand, forecasting of power output can help to estimate the amount of load that solar power can assist with. During times of high solar power production, batteries can be used to store the excess energy for times when the sun is not out (i.e., during the night, or on a rainy day).