The SP-multiple-alignment concept as a generalization of six other variants of information compression via the matching and unification of patterns

Received: 27-Jun-2023, Manuscript No. puljpam-23-6561; Editor assigned: 29-Jun-2023, Pre QC No. puljpam-23-6561 (PQ); Accepted Date: Jul 28, 2023; Reviewed: 03-Jul-2023 QC No. puljpam-23-6561 (Q); Revised: 05-Jul-2023, Manuscript No. puljpam-23-6561 (R); Published: 31-Jul-2023, DOI: 10.37532/2752-8081.23.7(4).247-260.

Citation: Wolff JG. The SP-multiple-alignment concept as a generalization of six other variants of information compression via the matching and unification of patterns. J Pure Appl Math. 2023; 7(4):247-260.

This open-access article is distributed under the terms of the Creative Commons Attribution Non-Commercial License (CC BY-NC) (http://creativecommons.org/licenses/by-nc/4.0/), which permits reuse, distribution and reproduction of the article, provided that the original work is properly cited and the reuse is restricted to noncommercial purposes. For commercial reuse, contact reprints@pulsus.com

Abstract

This paper is about the SP-Multiple-Alignment (SPMA) concept which, as described in other publications, is largely responsible for the versatility of the SP Theory of Intelligence and its realisation in the SP Computer Model (SPCM) in modelling diverse aspects of intelligence, diverse kinds of intelligence-related knowledge, their seamless integration in any combination, and some other potential benefits and applications. The paper aims, firstly, to describe how the SPMA concept is founded on ‘Information Compression (IC) via the Matching and Unification of Patterns’ (ICMUP), and then, secondly, to show with examples how the SPMA concept is a generalisation of six other variants of ICMUP—a generalisation that appears to be largely responsible for the intelligence-related and other strengths of the SPMA concept. Each of those six variants is described in a separate subsection, and in each case, there is a demonstration of how that variant may be modelled via the SPMA concept. To provide some context for this paper, those six variants are also the basis of arguments, in another paper, that much of mathematics, perhaps all of it, may be understood as a set of techniques for the compression of information, and their application; and there are potential benefits from the creation of a New Mathematics as an amalgamation of ‘IC as a foundation for intelligence’ and ‘IC as a foundation for mathematics’.

Key Words

Computer model; Theory of intelligence; Information compression; Foundation for mathematics

Introduction (Section 1)

This paper is about the SP-Multiple-Alignment (SPMA) concept which, as described in other publications, is largely responsible for the versatility of the SP-Theory of Intelligence (SPTI) and its realisation in the SP-Computer Model (SPCM).

More specifically, the paper describes how the SPMA concept is founded on ‘Information Compression (IC) via the Matching and Unification of Patterns’ (ICMUP), and how it is a generalisation of six other variants of ICMUP—a generalisation that appears to be largely responsible for the intelligence-related strengths of the SPMA concept, and strengths in other areas of application.

This paper and other publications relating to the SPTI and the SPCM may be characterised as ‘IC as a foundation for intelligence’.

A Bare-Bones Introduction to the SPTI (Section 2)

The SPTI, including the SPMA concept, is described quite fully in [1] and even more fully in [2].

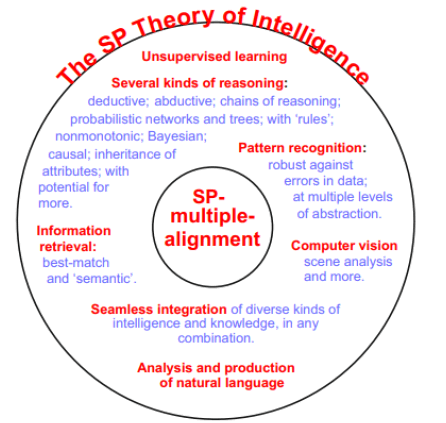

Those two sources describe how the SPMA concept is a key to the versatility of the SPTI in modelling diverse aspects of intelligence, including several kinds of probabilistic reasoning; how it is the key to the representation and processing of diverse kinds of intelligence-related knowledge; and how it facilitates the seamless integration of diverse aspects of intelligence and diverse kinds of intelligence-related knowledge, in any combination. That kind of seamless integration appears to be essential in any system that aspires to the fluidity, versatility, and adaptability of human intelligence.

The SPTI has been developed via a top-down, breadth-first research strategy with wide scope [3], seeking to simplify and integrate observations and concepts across Artificial Intelligence (AI), mainstream computing, mathematics, and human learning, perception, and cognition.

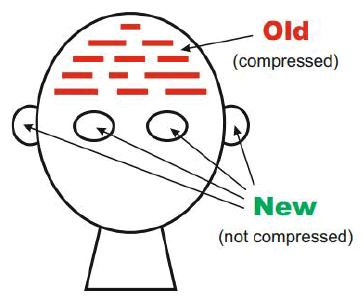

In broad terms, the SPTI is a brain-like system that takes in new information through its senses and stores some or all of it as Old information that is compressed, as shown schematically in Figure 1.

Figure 1: Schematic representation of the SPTI from an ‘input’ perspective. Reproduced, with permission, from Figure 1 in [1].

In the SPTI, all kinds of knowledge are represented with SP-patterns, where each such SP-pattern is an array, in one or two dimensions, of atomic SP-symbols. An SP-symbol is simply a ‘mark’ that can be matched with any other SP-symbol to determine whether it is the same or different.
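As a rough illustration of these two definitions, the Python sketch below represents a one-dimensional SP-pattern as a tuple of strings, each string standing for one SP-symbol. The names `make_pattern` and `symbols_match` are hypothetical and are not taken from the SPCM; the only operation defined on SP-symbols is the test for identity described above.

```python
# Hypothetical representation: an SP-symbol is an atomic "mark" (here a string)
# and a one-dimensional SP-pattern is an immutable sequence of SP-symbols.
SPSymbol = str
SPPattern = tuple[SPSymbol, ...]


def make_pattern(text: str) -> SPPattern:
    """Build a one-dimensional SP-pattern from space-separated SP-symbols."""
    return tuple(text.split())


def symbols_match(a: SPSymbol, b: SPSymbol) -> bool:
    """The only test on SP-symbols: are they the same or different?"""
    return a == b


new = make_pattern("t w o k i t t e n s p l a y")  # New SP-pattern from Figure 4
old = make_pattern("Nr 5 k i t t e n #Nr")          # one Old SP-pattern from Figure 4
print(len(new), symbols_match(new[3], old[2]))      # 14 True
```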

At present, the SPCM works only with one-dimensional SP-patterns but it is envisaged that it will be generalised to work with two-dimensional SP-patterns as well—a development which should facilitate the representation of pictures and diagrams. It is anticipated that the provision of two-dimensional SP-patterns would also facilitate the creation of 3D structures—as described in [4]. Two-dimensional SP-patterns may also serve to represent parallel processing as described in [5].

Strengths of the SPTI in modelling aspects of intelligence and other potential benefits and applications (Section 2.1)

The strengths of the SPTI, which are largely strengths of the SPMA concept, are summarised in Appendix A.

SP-Neural (Section 2.2)

The SPTI has been developed primarily in terms of abstract concepts such as the SPMA concept. However, a version of the SPTI called SP-Neural has also been described in outline, expressed in terms of neurons and their inter-connections and inter-communications. Current thinking in that area is described in [2] and more recently in [6].

It appears that, within SP-Neural, the SP concepts of SP-pattern and SP-Multiple-Alignment can be expressed in terms of neurons and their interconnections. The main challenge is how the processes of building SP-Multiple-Alignments, and of unsupervised learning, can be expressed in terms of neural processes.

Future developments (Section 2.3)

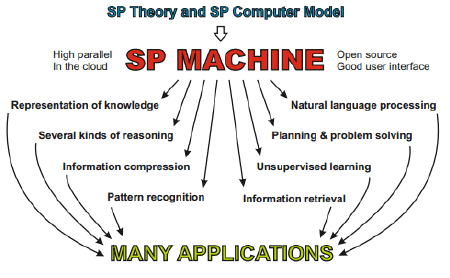

In view of the potential of the SPTI in diverse areas (Appendix A.1), the SPCM appears to hold promise as the foundation for the development of an SP Machine, described in [7], and illustrated schematically in Figure 2.

Figure 2: Schematic representation of the development and application of the SP Machine. Reproduced from Figure 2 in [1].

It is envisaged that the SP Machine will feature high levels of parallel processing and a good user interface. It may serve as a vehicle for further development of the SPTI by researchers anywhere. Eventually, it should become a system with industrial strength that may be applied to the solution of many problems in science, government, commerce, industry, and in non-profit endeavours.

The name ‘SP’ (Section 2.4)

Since people often ask, the name ‘SP’ originated like this:

• The SPTI aims to simplify and integrate observations and concepts across a broad canvas (Section 2), which means applying IC to those observations and concepts;

• IC is a central feature of the structure and workings of the SPTI;

• And IC may be seen as a process that increases the Simplicity of a body of information, I, whilst retaining as much as possible of the descriptive and explanatory Power of I.

Nevertheless, it is intended that ‘SP’ should be treated as a name, without any need to expand the letters in the name, as with such names as ‘IBM’ or ‘BBC’.

The SP-Multiple-Alignment Concept (Section 3)

Although the SPMA concept is part of the SPTI, it is given a section on its own because of its importance in this paper. The following subsections describe the origin and development of the SPMA concept, its organisation and workings, and its strengths in intelligence-related and other applications.

Multiple sequence alignments as inspiration for the SPMA concept (Section 3.1)

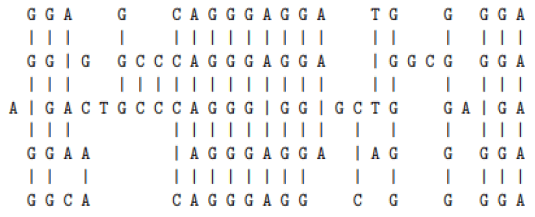

The SPMA concept has been borrowed and adapted from the concept of ‘multiple sequence alignment’ in bioinformatics, illustrated in Figure 3. Here, there are five DNA sequences which have been arranged alongside each other, and then, by judicious ‘stretching’ of one or more of the sequences in a computer, symbols that match each other across two or more sequences have been brought into line.

A ‘good’ multiple sequence alignment, like the one shown, is one with a relatively large number of matching symbols from row to row.
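The ‘stretching’ of sequences can be sketched for the simplest case of two sequences. In the hypothetical Python function below, stretching is modelled by inserting gap characters (‘-’), and a ‘good’ alignment is taken to be one with the largest number of matching columns (a longest common subsequence). This is an assumption made for brevity: practical bioinformatics tools use richer scoring schemes, and exact dynamic programming of this kind does not scale to many sequences, which is one reason heuristics are needed.

```python
def align_pair(a: str, b: str) -> tuple[str, str, int]:
    """Align two sequences so that as many symbols as possible are brought into line.

    Simplified sketch: 'stretching' is modelled by inserting gaps ('-'), and the
    score is just the number of matching columns (a longest common subsequence).
    """
    n, m = len(a), len(b)
    # best[i][j] = largest number of matches between the suffixes a[i:] and b[j:]
    best = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n - 1, -1, -1):
        for j in range(m - 1, -1, -1):
            if a[i] == b[j]:
                best[i][j] = best[i + 1][j + 1] + 1
            else:
                best[i][j] = max(best[i + 1][j], best[i][j + 1])

    # Trace back through the table to build the two gapped rows.
    row_a: list[str] = []
    row_b: list[str] = []
    i = j = 0
    while i < n and j < m:
        if a[i] == b[j]:          # matching symbols share a column
            row_a.append(a[i])
            row_b.append(b[j])
            i += 1
            j += 1
        elif best[i + 1][j] >= best[i][j + 1]:
            row_a.append(a[i])    # stretch b with a gap
            row_b.append("-")
            i += 1
        else:
            row_a.append("-")     # stretch a with a gap
            row_b.append(b[j])
            j += 1
    # Whatever remains of either sequence is set against gaps.
    row_a.extend(a[i:] + "-" * (m - j))
    row_b.extend("-" * (n - i) + b[j:])
    return "".join(row_a), "".join(row_b), best[0][0]


print(align_pair("ACGT", "AGT"))  # ('ACGT', 'A-GT', 3)
```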

Because the computational complexity of finding one or more ‘good’ multiple sequence alignments is normally very large, it is usually necessary to use heuristic techniques, trading accuracy for speed. This means conducting the search for good structures in stages, discarding all but the best alignments at the end of each stage. With these kinds of methods, reasonably good results may be achieved within a reasonable time, but normally they cannot guarantee that the best possible result has been found.

Development of the SP-Multiple-Alignment concept (Section 3.2)

Although the SPMA concept (illustrated in Figure 4, below) is recognisably similar to the concept of multiple sequence alignment, developing the SPMA concept, and exploring its range of potential applications, was a major undertaking over a period of several years, with the development of hundreds of versions of the SPMA concept, each one within its own version of the SPCM.

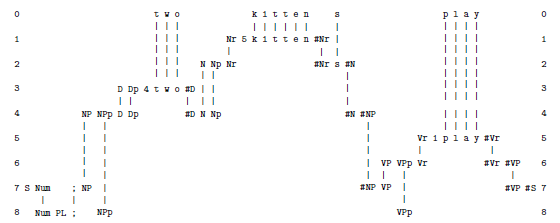

The organisation of SPMAs and how they are created (Section 3.3)

Figure 4 shows an example of an SPMA, superficially similar to the one in Figure 3, except that:

• Each row of the SPMA contains one SP-pattern, and the SP-pattern in row 0 is New information, meaning information recently received from the system’s environment;

• Each of the remaining SP-patterns, one per row, is an Old SP-pattern, drawn from a relatively large pool of Old SP-patterns. Unless they are supplied by the user, the Old SP-patterns are derived via unsupervised learning [1,2] from previously received new information;

• Each SPMA is scored in terms of how well the New SP-pattern may be encoded economically in terms of the Old SP-patterns, as described in [1, 2].

In this example, the New SP-pattern (in row 0) is a sentence and each of the remaining SP-patterns represents a grammatical category, where ‘grammatical categories’ include words. The overall effect of the SPMA in this example is the parsing of a sentence (‘t w o k i t t e n s p l a y’) into its grammatical parts and sub-parts.

As with multiple sequence alignments, it is almost always necessary to use heuristic methods to achieve useful results without undue computational demands. The use of heuristic methods helps to ensure that computational complexities in the SPTI are within reasonable bounds [2].

Each SPMA is built up progressively, starting with a process of finding good alignments between pairs of SP-patterns. At the end of each stage, SPMAs that score well in terms of IC are retained and the rest are discarded. There is more detail in [1,2].

In the SPCM, the size of the memory available for searching may be varied, which means in effect that the scope for backtracking can be varied. When the scope for backtracking is increased, the chance of the program getting stuck on a ‘local peak’ (or ‘local minimum’) in the search space is reduced.
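A staged search with a variable amount of memory resembles what is often called beam search. The generic Python sketch below is not the SPCM’s own code: `beam_width` is a hypothetical stand-in for the size of the memory available for searching, and the toy scores in `TOY_SCORES` are invented purely to show how a narrow beam can get stuck on a local peak that a wider beam avoids.

```python
import heapq
from typing import Callable, Iterable, TypeVar

State = TypeVar("State")


def beam_search(start: State,
                extend: Callable[[State], Iterable[State]],
                score: Callable[[State], float],
                stages: int,
                beam_width: int) -> State:
    """Grow candidates stage by stage, keeping only the best `beam_width` each time."""
    beam = [start]
    for _ in range(stages):
        candidates = [child for state in beam for child in extend(state)]
        if not candidates:
            break
        beam = heapq.nlargest(beam_width, candidates, key=score)
    return max(beam, key=score)


# Toy search space, invented for illustration: choosing 0 first looks better,
# but only the path (1, 1) reaches the highest score.
TOY_SCORES = {(): 0, (0,): 2, (1,): 1,
              (0, 0): 2, (0, 1): 3, (1, 0): 1, (1, 1): 10}


def toy_extend(state: tuple) -> list[tuple]:
    return [state + (choice,) for choice in (0, 1)]


for width in (1, 2):
    best = beam_search((), toy_extend, TOY_SCORES.__getitem__, stages=2, beam_width=width)
    print(width, best, TOY_SCORES[best])
# 1 (0, 1) 3
# 2 (1, 1) 10
```

With a beam width of 1 only `(0,)` survives the first stage, so the search ends on the local peak `(0, 1)`; with a width of 2 the less promising `(1,)` is also kept and leads to `(1, 1)`.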

Contrary to the impression that may be given by Figure 4, which shows only the parsing of a sentence, the SPMA concept, as noted earlier, is very versatile and is largely responsible for the strengths of the SPTI, as summarised in the next subsection and Appendix A.

Strengths of the SPMA concept (Section 3.4)

As noted above, the intelligence-related strengths of the SPMA concept are largely responsible for corresponding strengths of the SPTI, described in Appendix A.1. The SPMA concept also underpins other potential benefits and applications of the SPTI (Appendix A.4), and it has clear potential to solve 20 significant problems in AI research (Appendix A.5).

Bearing in mind that it is just as bad to underplay the strengths of a system as it is to oversell its strengths, it seems reasonable to say that the SPMA concept may prove to be as significant for an understanding of ‘intelligence’ as is DNA for biological sciences. It may prove to be the ‘double helix’ of intelligence.

IC Via ICMUP (Section 4)

As described in Section 6.1, ICMUP simply means finding two or more patterns that match each other and then merging or ‘unifying’ them to make one.

There are three details that can make things a little more complicated:

• Patterns that match each other need not be coherent groupings of SP-symbols. For example, the SPCM can and often does work with partial matches between such SP-patterns as ‘t h r o w m e t h e b a l l’ and ‘t h r o w d a d d y t h e b a l l’. Another example may be seen in Figure 4 where the New SP-pattern ‘t w o k i t t e n s p l a y’ forms partial matches with four different Old SP-patterns, including the ‘N Np Nr #Nr s #N’ SP-pattern standing in for the plural form of ‘kitten’.

• When compression of a body of information, I, is to be achieved via ICMUP, any repeating pattern that is to be unified should occur more often in I than one would expect by chance in a body of information of the same size as I that is entirely random.

• Compression can be optimised by giving shorter codes to chunks that occur frequently and longer codes to chunks that are rare. This may be done using some such scheme as Shannon-Fano-Elias coding, described in, for example, [8].
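The second and third points can be made concrete with a short Python sketch. `expected_random_count` assumes, for illustration, that ‘entirely random’ means each symbol is drawn independently and uniformly from an alphabet of size a, so that one particular pattern of length k is expected (n - k + 1)/a^k times in n symbols. `sfe_codes` follows the textbook Shannon-Fano-Elias construction, in which a chunk with probability p receives the first ⌈log2(1/p)⌉ + 1 bits of the midpoint of its cumulative-probability interval. All three function names are hypothetical.

```python
from fractions import Fraction
from math import ceil, log2


def expected_random_count(n: int, k: int, alphabet_size: int) -> float:
    """Expected occurrences of one specific length-k pattern in n random symbols.

    Assumes every symbol is drawn independently and uniformly at random.
    """
    if k > n:
        return 0.0
    return (n - k + 1) / alphabet_size ** k


def observed_count(text: str, pattern: str) -> int:
    """Number of (possibly overlapping) occurrences of pattern in text."""
    k = len(pattern)
    return sum(1 for i in range(len(text) - k + 1) if text[i:i + k] == pattern)


def sfe_codes(freqs: dict[str, int]) -> dict[str, str]:
    """Shannon-Fano-Elias codes: frequent chunks get shorter codes."""
    total = sum(freqs.values())
    codes: dict[str, str] = {}
    cumulative = Fraction(0)  # probability mass of the chunks already coded
    for chunk, count in freqs.items():
        p = Fraction(count, total)
        value = cumulative + p / 2  # midpoint of this chunk's interval
        length = ceil(log2(1 / p)) + 1
        bits = []
        for _ in range(length):  # first `length` bits of the binary expansion
            value *= 2
            bit = int(value >= 1)
            bits.append(str(bit))
            value -= bit
        codes[chunk] = "".join(bits)
        cumulative += p
    return codes


data = "INFORMATION" * 5
print(observed_count(data, "INFORMATION"))               # 5
print(expected_random_count(len(data), 11, 26) < 1e-10)  # True
print(sfe_codes({"a": 2, "b": 1, "c": 1}))  # {'a': '01', 'b': '101', 'c': '111'}
```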

The SPMA Concept as a Variant of ICMUP (Section 5)

The SPMA structure in Figure 4 shows how the SPMA concept may be seen as a variant of ICMUP:

• There are partial matches between the New SP-pattern and Old SP-patterns as described above. There are also partial matches between Old SP-patterns.

• The process of evaluating the SPMA in terms of IC means unifying all the matching SP-patterns so that the SPMA is reduced to a single sequence:

‘S Num PL ; NP NPp D Dp 4 t w o #D N Np Nr 5 k i t t e n #Nr s #N #NP VP VPp Vr Vr 1 p l a y #Vr #Vr #VP #S’

• From this unification, the SPTI calculates the overall compression, as described in [1,2].
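The unification step can be sketched under the simplifying assumption that an SPMA is stored as a grid of rows and columns, with `None` marking a position where a row has no SP-symbol. This grid representation and the name `unify_alignment` are hypothetical, not the SPCM’s internal data structure. Each column must contain one or more copies of a single SP-symbol, and unification keeps one copy per column. The example uses the fragment of Figure 4 in which ‘t w o’ in row 0 is matched with ‘D Dp 4 t w o #D’ in row 3.

```python
from typing import Optional


def unify_alignment(rows: list[list[Optional[str]]]) -> list[str]:
    """Merge the rows of a (hypothetical) grid-form SPMA into one sequence."""
    width = len(rows[0])
    if any(len(row) != width for row in rows):
        raise ValueError("all rows must have the same number of columns")
    unified = []
    for col in range(width):
        # All SP-symbols in a column must match, so the column unifies to one symbol.
        symbols = {row[col] for row in rows if row[col] is not None}
        if len(symbols) != 1:
            raise ValueError(f"column {col} does not hold one matching SP-symbol: {symbols}")
        unified.append(symbols.pop())
    return unified


row0 = [None, None, None, "t", "w", "o", None]   # part of the New SP-pattern
row3 = ["D", "Dp", "4", "t", "w", "o", "#D"]     # Old SP-pattern in row 3
print(" ".join(unify_alignment([row0, row3])))   # D Dp 4 t w o #D
```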

How six other variants of ICMUP may be modelled via the SPMA concept (Section 6)

The six subsections that follow describe the other six variants of ICMUP and, for each one, describe how it may be modelled within the SPMA framework. All the examples are from the SPCM.

Basic ICMUP (Section 6.1)

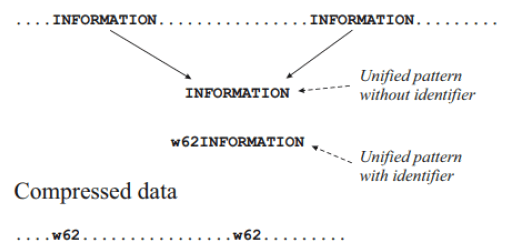

The simplest of the techniques to be described—referred to as Basic ICMUP—is to find two patterns that match each other within a given body of information, I, and then merge or ‘unify’ them so that the two instances are reduced to one.

This is illustrated in the upper part of Figure 5, where two instances of the pattern ‘INFORMATION’ near the top of the figure have been reduced to one instance, shown just above the middle of the figure. Here, and in subsections below, we shall assume that the single SP-pattern which is the product of unification is placed in some kind of dictionary of SP-patterns that is separate from I.
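Basic ICMUP, as just described, can be sketched in a few lines of Python. The function `basic_icmup` is a hypothetical illustration: if a given pattern occurs two or more times in the data, one copy is placed in a separate dictionary and every instance is removed from the data. The output shows why this is lossy, since nothing in the result records where the instances of ‘INFORMATION’ used to be.

```python
def basic_icmup(data: str, pattern: str, dictionary: set[str]) -> str:
    """Unify repeated instances of `pattern`: keep one copy in `dictionary` and
    delete every instance from `data` (lossy: their positions are forgotten)."""
    if data.count(pattern) < 2:
        return data  # nothing to unify
    dictionary.add(pattern)
    return data.replace(pattern, "")


dictionary: set[str] = set()
result = basic_icmup("xxINFORMATIONyyINFORMATIONzz", "INFORMATION", dictionary)
print(result, dictionary)  # xxyyzz {'INFORMATION'}
```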

Figure 5: A schematic representation of the way two instances of the pattern ‘INFORMATION’ in a body of data may be unified to form a single ‘unified’ pattern, shown just above the middle of the figure. Section 6.2 describes how, to achieve lossless compression, the relatively short identifier ‘w62’ may be assigned to the unified pattern ‘INFORMATION’, as shown below the middle of the figure. At the bottom of the figure, the original data may be compressed by replacing each instance of ‘INFORMATION’ with a copy of the relatively short identifier, ‘w62’. Adapted from Figure 2.3 in [2].

The elements of Basic ICMUP can be seen in several parts of Figure 4. For example, ‘t w o’ in row 0 matches ‘D Dp 4 t w o #D’ in row 3. This means that, when all the rows in the SPMA are unified, those two SP-patterns will be unified. Likewise for ‘k i t t e n’ in row 0 and ‘Nr 5 k i t t e n #Nr’ in row 1, and so on.

Chunking-with-Codes (Section 6.2)

A point that has been glossed over in describing Basic ICMUP is that, when a body of information, I, is to be compressed by unifying two or more instances of a pattern like ‘INFORMATION’, there is a loss of information about the location within I of each instance of the pattern ‘INFORMATION’. In other words, Basic ICMUP achieves ‘lossy’ compression of I.

This problem may be overcome with the Chunking-With-Codes variant of ICMUP:

• A unified pattern like ‘INFORMATION’, which is often referred to as a ‘chunk’ of information, is stored in a dictionary of patterns, as mentioned in Section 6.1.

• Now, the unified chunk is given a relatively short name, identifier, or ‘code’, like the ‘w62’ pattern appended at the front of the ‘INFORMATION’ pattern, shown below the middle of Figure 5.

• Then the ‘w62’ code is used as a shorthand which replaces the ‘INFORMATION’ chunk of information wherever it occurs within I. This is shown at the bottom of Figure 5.

• Since the code ‘w62’ is shorter than each instance of the pattern ‘INFORMATION’ which it replaces, the overall effect is to shorten I. But, unlike Basic ICMUP, Chunking-With-Codes may achieve ‘lossless’ compression of I because the original information may be retrieved fully at any time.

• Further details are given in the three bullet points at the end of Section 4.
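The difference from Basic ICMUP can be shown with a sketch in which each instance of the chunk is replaced by a code rather than deleted, so that decoding restores the original exactly. The names `encode` and `decode` are hypothetical. The sketch assumes, as with ‘w62’, that the code does not already occur in the data and cannot overlap a copy of itself, so that every occurrence of the code after encoding is one that was inserted.

```python
def encode(data: str, chunk: str, code: str, dictionary: dict[str, str]) -> str:
    """Store `chunk` under `code` and replace every instance of it by the code."""
    if code in data:
        raise ValueError("the code must not already occur in the data")
    dictionary[code] = chunk
    return data.replace(chunk, code)


def decode(data: str, dictionary: dict[str, str]) -> str:
    """Replace every code by its chunk, recovering the original data."""
    for code, chunk in dictionary.items():
        data = data.replace(code, chunk)
    return data


original = "xxINFORMATIONyyINFORMATIONzz"
dictionary: dict[str, str] = {}
compressed = encode(original, "INFORMATION", "w62", dictionary)
print(compressed)                                  # xxw62yyw62zz
print(decode(compressed, dictionary) == original)  # True
```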

The concept of ‘chunk’ and the concept of ‘object’ (Section 6.2.1)

The concept of a ‘chunk’ of information, which became prominent following George Miller’s paper “The magical number seven, plus or minus two: some limits on our capacity for processing information”, is normally applied to anything that represents a relatively large amount of repeated information (redundancy) in the world via a favourable combination of frequency and size [9].

As such, there is a clear similarity between chunks such as words and chunks such as three-dimensional objects because they can both be seen to represent redundancies in the world in the form of recurrent bodies of information. But of course 3D objects are normally more complex than words.

In general, the concept of a chunk is of central importance in the Chunking-With-Codes variant of ICMUP (this section) and also in the variants described in the sections that follow: Schema-Plus-Correction (Section 6.3), Run-Length Coding (Section 6.4), Class-Inclusion Hierarchies (Section 6.5), and Part-Whole Hierarchies (Section 6.6).

The expression of Chunking-With-Codes within the SPMA framework (Section 6.2.2)

Within Figure 4, the Chunking-With-Codes technique can be seen in the SP-pattern ‘Nr 5 k i t t e n #Nr’, where the SP-symbols ‘Nr’, ‘5’, and ‘#Nr’ may be seen as codes for the SP-pattern ‘k i t t e n’. The reason that there are several code SP-symbols and not just one is to do with the way in which SPMAs are created and used. Several other examples may be seen in the same figure.

Schema-Plus-Correction (Section 6.3)

A variant of the Chunking-With-Codes version of ICMUP is called Schema-Plus-Correction. Here, the ‘schema’ is like a chunk of information which, as with Chunking-With-Codes, has a relatively short identifier or code that may be used to represent the chunk.

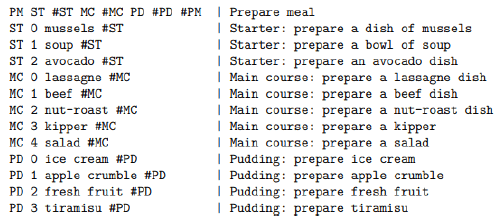

What is different about the Schema-Plus-Correction version of the chunk concept is that the schema may be modified or ‘corrected’ in various ways on different occasions. This is illustrated with the SPMA concept in Figure 6, which shows a set of SP-patterns describing, in a very simplified form, how a meal may be prepared in a cafe or restaurant.

Figure 6: A set of SP-patterns (an ‘SP-grammar’) representing, in a highly simplified form, the kinds of procedures involved in preparing a meal for a customer in a restaurant or cafe. To the right of each SP-pattern, after the character ‘|’, is an explanatory comment.

The first SP-pattern in the figure, ‘PM ST #ST MC #MC PD #PD #PM’, may be seen as a schema for preparing a meal where the two SP-symbols ‘PM ... #PM’ may be seen as the identifier or code for the schema, and ‘PM’ is short for ‘prepare meal’.

In the same SP-pattern, the pair of SP-symbols ‘ST #ST’ serves as a slot where the starter may be specified, the pair of SP-symbols ‘MC #MC’ serves as a slot for the main course, and the pair of SP-symbols ‘PD #PD’ serves as a slot for the pudding. Each of the other SP-patterns in Figure 6 describes a dish, each one marked with SP-symbols as a starter, or a main course, or a pudding.

We can see how this works in Figure 7. This is the best SPMA created by the SP Computer Model with the New SP-pattern ‘PM 0 4 1 #PM’ and Old SP-patterns from Figure 6.

Unlike the SPMA in Figure 4, this SPMA is rotated by 90°. The two arrangements are equivalent: the choice depends largely on what fits best on the page.

Here, we can see how the SP-symbol ‘0’ in column 0 has selected the starter dish ‘mussels’ (column 3), the SP-symbol ‘4’ in column 0 has selected the main course ‘salad’ (column 2), and the SP-symbol ‘1’ in column 0 has selected the pudding ‘apple crumble’ (column 4).

In short, the SP-pattern ‘PM ST #ST MC #MC PD #PD #PM’ in Figure 6 may be ‘corrected’ by the SP-pattern ‘PM 0 4 1 #PM’ to create the whole menu. In particular, the whole system allows any particular meal to be specified very economically with an SP-pattern like ‘PM 0 4 1 #PM’.
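A minimal sketch of the idea, written as ordinary Python rather than as an SPMA, is shown below. It derives the slots ‘ST’, ‘MC’, and ‘PD’ from the schema in Figure 6 and uses the codes in a correction such as ‘PM 0 4 1 #PM’ to fill them. The lookup table `DISHES` is hypothetical and lists only the three dishes named in the text; the remaining dishes of Figure 6 are not reproduced, and the SPCM achieves the same effect by building an SPMA, not by table lookup.

```python
SCHEMA = "PM ST #ST MC #MC PD #PD #PM".split()

# Hypothetical lookup table: only the three dishes named in the text.
DISHES = {
    "ST": {"0": "mussels"},
    "MC": {"4": "salad"},
    "PD": {"1": "apple crumble"},
}


def slots(schema: list[str]) -> list[str]:
    """Slot names are the opening symbols between the schema's own code 'PM ... #PM'."""
    return [s for s in schema[1:-1] if not s.startswith("#")]


def apply_correction(correction: str) -> dict[str, str]:
    """Fill each slot of the schema with the dish selected by the correction."""
    symbols = correction.split()
    if symbols[0] != SCHEMA[0] or symbols[-1] != SCHEMA[-1]:
        raise ValueError("a correction must be framed by 'PM ... #PM'")
    names = slots(SCHEMA)
    codes = symbols[1:-1]
    if len(codes) != len(names):
        raise ValueError(f"expected {len(names)} codes, got {len(codes)}")
    return {slot: DISHES[slot][code] for slot, code in zip(names, codes)}


print(apply_correction("PM 0 4 1 #PM"))
# {'ST': 'mussels', 'MC': 'salad', 'PD': 'apple crumble'}
```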

Run-Length Coding (Section 6.4)

A third variant, Run-Length Coding, may be used where there is a sequence of two or more copies of a pattern, each one except the first following immediately after its predecessor like this:

‘INFORMATIONINFORMATIONINFORMATIONINFORMATIONINFORMATION’.

In this case, the multiple copies may be reduced to one, as before, something like ‘INFORMATION×5’, where ‘×5’ shows how many repetitions there are; or something like ‘(INFORMATION*)’, where ‘(’ and ‘)’ mark the beginning and end of the pattern, and where ‘*’ signifies repetition (but without anything to say when the repetition stops).
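The first form, ‘INFORMATION×5’, can be produced and reversed with a short Python sketch. The functions below are hypothetical and handle only the simple case in which the data consist entirely of back-to-back copies of a known unit; discovering the repeating unit automatically is a separate problem that is not attempted here.

```python
def run_length_encode(data: str, unit: str) -> tuple[str, int]:
    """Reduce back-to-back copies of `unit` to one copy plus a repeat count."""
    if not unit:
        raise ValueError("unit must not be empty")
    count = 0
    while data.startswith(unit, count * len(unit)):
        count += 1
    if count * len(unit) != len(data):
        raise ValueError("data is not a plain run of the unit")
    return unit, count


def run_length_decode(unit: str, count: int) -> str:
    """Expand the unit back into the original run."""
    return unit * count


unit, count = run_length_encode("INFORMATION" * 5, "INFORMATION")
print(f"{unit}×{count}")  # INFORMATION×5
```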

In a similar way, a sports coach might specify exercises as something like ‘touch toes (×15), push-ups (×10), skipping (×30), ...’ or ‘Start running on the spot when I say “start” and keep going until I say “stop”’.

With the ‘running’ example, ‘start’ marks the beginning of the sequence, ‘keep going’ in the context of ‘running’ means ‘keep repeating the process of putting one foot in front of the other, in the manner of running’, and ‘stop’ marks the end of the repeating process. It is clearly much more economical to say ‘keep going’ than to constantly repeat the instruction to put one foot in front of the other.

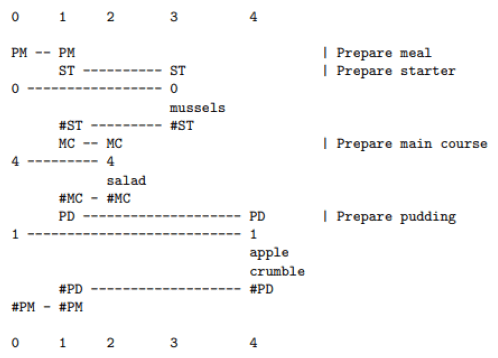

In the SPMA framework, the Run-Length Coding concept may be expressed via recursion, as shown in Figure 8. From top to bottom, this shows the sequence ‘procedure-A’, ‘procedure-B’, ‘procedure-C’, and ‘procedure-D’. But ‘procedure-B’ is repeated three times via the self-referential SP-pattern ‘ri ri1 ri #ri b #b #ri’ which appears in columns 5, 7, and 9.

This SP-pattern is self-referential because the pair of SP-symbols ‘ri ... #ri’ at the beginning and end of the SP-pattern matches the same pair of SP-symbols in the body of the SP-pattern: ‘ri #ri’ (Figure 8).

An important point here is that the recursive structure is only possible because the SPMA concept allows any given SP-pattern to appear two or more times in any one SPMA. In this case, the SP-patterns which, individually, appear more than once in the SPMA are ‘ri ri1 ri #ri b #b #ri’ and ‘b b1 procedure-B #b’.
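The effect of the self-referential SP-pattern can be loosely mimicked by a recursive function in which the inner ‘ri #ri’ slot is filled by a further copy of the same rule, and each copy contributes one ‘procedure-B’. This Python sketch is only an analogy: the name `expand_ri` is hypothetical, and it simply stops after a given depth, whereas in the SPMA the number of repetitions emerges from the alignment itself.

```python
def expand_ri(depth: int) -> list[str]:
    """Analogue of 'ri ri1 ri #ri b #b #ri': an inner copy of the rule, then one B."""
    if depth == 0:
        return []  # no further copy fills the inner 'ri #ri' slot
    return expand_ri(depth - 1) + ["procedure-B"]


sequence = ["procedure-A"] + expand_ri(3) + ["procedure-C", "procedure-D"]
print(sequence)
# ['procedure-A', 'procedure-B', 'procedure-B', 'procedure-B', 'procedure-C', 'procedure-D']
```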

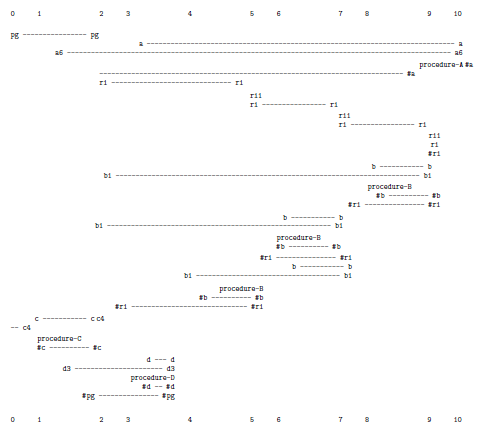

Class-Inclusion hierarchies (Section 6.5)

A widely-used idea in everyday thinking and elsewhere is the Class-Inclusion Hierarchy: the grouping of entities into classes, and the grouping of classes into higher-level classes, and so on, through as many levels as are needed.

This idea may achieve ICMUP because, at each level in the hierarchy, attributes may be recorded which apply to that level and all levels below it—so economies may be achieved because, for example, it is not necessary to record that cats have fur, dogs have fur, rabbits have fur, and so on. It is only necessary to record that mammals have fur and ensure that all lower-level classes and entities can ‘inherit’ that attribute.

In effect, multiple instances of the attribute ‘fur’ have been merged or unified to create that attribute for mammals, thus achieving compression of information. The concept of class-inclusion hierarchies with inheritance of attributes is quite fully developed in Object-Oriented Programming (OOP), which originated with the Simula programming language [10], is now widely adopted in modern programming languages, and is probably the inspiration for ‘Object-Oriented Design’ (OOD).

This idea may be generalised to cross-classification, where any one entity or class may belong in two or more higher-level classes that are not related to each other as superclass and subclass. For example, a given person may belong in the classes ‘woman’ and ‘doctor’ although ‘woman’ is not a subclass of ‘doctor’ and vice versa.

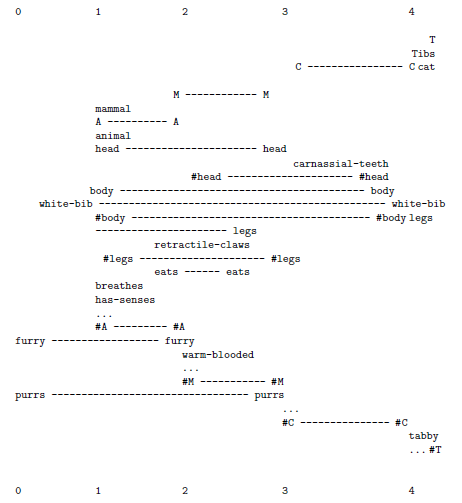

Figure 9 shows how the Class-Inclusion Hierarchy concept may be expressed within the SPMA framework. Here, a previously unknown entity with features shown in the New SP-pattern in column 0 may be recognised at several levels of abstraction: as an animal (column 1), as a mammal (column 2), as a cat (column 3) and as the specific cat ‘Tibs’ (column 4). These are the kinds of classes used in ordinary systems for OOD/OOP.

Figure 9: The best SPMA found by the SP model, with the New SP-pattern ‘white-bib eats furry purrs’ shown in column 0, and a set of Old SP-patterns representing different categories of animal and their attributes shown in columns 1 to 4. Reproduced from Figure 6.7 in [2].

From this SPMA, we can see how the entity that has now been recognised inherits unseen characteristics from each of the levels in the class hierarchy: as an animal (column 1) the creature ‘breathes’ and ‘has-senses’, as a mammal it is ‘warm-blooded’, as a cat it has ‘carnassial-teeth’ and ‘retractile-claws’, and as the individual cat Tibs it has a ‘white-bib’ and is ‘tabby’ (Figure 9).
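Since class-inclusion hierarchies with inheritance are well developed in OOP, the hierarchy of Figure 9 can be mirrored with Python classes. In this hypothetical sketch each attribute named above is recorded once, at the highest level to which it applies, and `inherited_attributes` collects everything a class inherits by walking its method resolution order. Tibs is modelled as a class only for brevity, and the other features in the New SP-pattern are omitted because the text does not say at which level they are recorded.

```python
class Animal:
    attributes = {"breathes", "has-senses"}


class Mammal(Animal):
    attributes = {"warm-blooded"}


class Cat(Mammal):
    attributes = {"carnassial-teeth", "retractile-claws"}


class Tibs(Cat):
    attributes = {"white-bib", "tabby"}


def inherited_attributes(cls: type) -> set[str]:
    """Union of the attributes stored at each level from cls up to the root."""
    result: set[str] = set()
    for klass in cls.__mro__:
        result |= vars(klass).get("attributes", set())
    return result


print(sorted(inherited_attributes(Tibs)))
# ['breathes', 'carnassial-teeth', 'has-senses', 'retractile-claws', 'tabby', 'warm-blooded', 'white-bib']
```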

Part-Whole hierarchies (Section 6.6)

Another widely-used idea is the Part-Whole Hierarchy in which a given entity or class of entities is divided into parts and sub-parts through as many levels as are needed. Here, ICMUP may be achieved because two or more parts of a class such as ‘car’ may share the overarching structure in which they all belong. So, for example, each wheel of a car, the doors of a car, the engine of a car, and so on, all belong in the same encompassing structure, ‘car’, and it is not necessary to repeat that enveloping structure for each individual part.

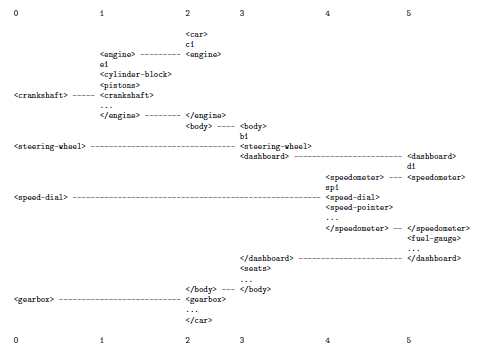

Figure 10 shows how a part-whole hierarchy may be accommodated in the SP system. Here, an SP-pattern representing the concept of a car is shown in column 2, with parts such as ‘<engine>’, ‘<body>’, and ‘<gearbox>’. The SP-pattern in column 1 shows parts of an engine such as ‘<cylinder-block>’, ‘<pistons>’, and ‘<crankshaft>’. The SP-pattern in column 3 shows how the body may be divided into such things as ‘<steering-wheel>’, ‘<dashboard>’, and ‘<seats>’. The SP-pattern in column 5 divides the dashboard into parts that include ‘<speedometer>’ and ‘<fuel-gauge>’. And the SP-pattern in column 4 divides the speedometer into ‘<speed-dial>’, ‘<speed-pointer>’, and more.

The kind of structure shown in Figure 10 exhibits a form of inheritance, much like inheritance in a class-inclusion hierarchy. In this case, recognition of something as a ‘<speed-dial>’ suggests that it is likely to be part of a ‘<dashboard>’, which itself is likely to be part of the ‘<body>’ of a ‘<car>’. This is the kind of inference that crime investigators make when, having discovered a severed arm, they search for the rest of the body.
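The upward inference from a part to the wholes that contain it can be sketched as a walk up a tree. The table below is a hypothetical, partial transcription of the parts listed for Figure 10 (the figure includes more, and the angle brackets are omitted), and `wholes_containing` simply follows each part to its enclosing whole until it reaches the top.

```python
# Hypothetical, partial transcription of the parts listed for Figure 10.
PARTS = {
    "car": ["engine", "body", "gearbox"],
    "engine": ["cylinder-block", "pistons", "crankshaft"],
    "body": ["steering-wheel", "dashboard", "seats"],
    "dashboard": ["speedometer", "fuel-gauge"],
    "speedometer": ["speed-dial", "speed-pointer"],
}

# Invert the table so that each part points to the whole it belongs to.
PARENT = {part: whole for whole, parts in PARTS.items() for part in parts}


def wholes_containing(part: str) -> list[str]:
    """Follow a part upwards through every enclosing whole, innermost first."""
    chain = []
    while part in PARENT:
        part = PARENT[part]
        chain.append(part)
    return chain


print(wholes_containing("speed-dial"))  # ['speedometer', 'dashboard', 'body', 'car']
```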

Potential benefits of a New Mathematics as an Amalgamation of IC as a Foundation for Intelligence and IC as a Foundation for Mathematics (Section 7)

To provide some context for this paper, there appear to be potential benefits in the development of a New Mathematics as an amalgamation of the SPTI with mathematics, because IC is fundamental in the SPTI and because there is good evidence, described in [11], that IC is also fundamental in mathematics.

That amalgamation between mathematics and current or future versions of the SPTI means that the SPTI may gain from more than 2000 years of thinking about mathematics, and mathematics may gain additional techniques for IC, including the SPMA concept, unsupervised learning in mature versions of the SPTI, and potentially other techniques with further development of the SPTI.

Potential benefits of such a New Mathematics, drawing on those outlined in [11], are in summary:

• The New Mathematics would have all the strengths of mathematics and all the strengths of the SPTI, summarised in Appendix A.

• In addition, there is potential for a synergy between mathematics and the SPTI, meaning the potential for many applications beyond the sum of those encompassed by mathematics alone and the SPTI alone.

• With further development of unsupervised learning in the SPTI, there is likely to be potential for the automatic or semi-automatic development of scientific theories and for the integration of theories that are currently not well integrated.

• Since the development of the SPTI has drawn quite heavily on what is known about human learning, perception, and cognition, there is potential for the creation of systems for the representation and processing of knowledge that are a better fit than ordinary mathematics for the way that people, including leading scientists, naturally think. For example:

o Carlo Rovelli writes that ‘Einstein had a unique capacity to imagine how the world might be constructed, to ‘see’ it in his mind.’ [12].

o It appears that Michael Faraday developed his ideas about electricity and magnetism with little or no knowledge of mathematics: ‘Without knowing mathematics, Faraday writes one of the best books of physics ever written, virtually devoid of equations. He sees physics with his mind’s eye, and with his mind’s eye creates worlds.’ [12].

o The famous Feynman diagrams, invented by the Nobel-prize-winning physicist Richard Feynman outside the realms of conventional mathematics, provided, and still provide, a means of representing concepts in particle physics that greatly simplifies the associated calculations.

o Although John Venn acknowledged that he was not the first to invent Venn diagrams [13], he gave them prominence, and his interest in them appears to reflect his negative attitude to what were, in his day, the established approaches to mathematics and logic.

Conclusion (Section 7)

The main subject of this paper is the SP-Multiple-Alignment (SPMA) concept as an example of information compression via the matching and unification of patterns (ICMUP), and how it may be understood as a generalization of six other variants of ICMUP.

The SPMA concept has been shown in other publications to be a powerful vehicle for modelling diverse aspects of intelligence, for the representation and processing of diverse kinds of intelligence-related knowledge, and for the seamless integration of diverse aspects of intelligence and diverse kinds of intelligence-related knowledge, in any combination (strengths which are summarised in Appendix A).

Each of the six variants of ICMUP (additional to the SPMA concept) is described in a separate section, and in each case, there is a demonstration via the SP Computer Model (SPCM) of how that variant may be modelled within the SPMA framework.

The way in which the SPMA concept generalises six other variants of ICMUP seems to be largely responsible for the intelligence-related and other strengths of the SPMA concept.

As noted in Section 3.4, the SPMA concept may prove to be as significant for an understanding of ‘intelligence’ as is DNA for biological sciences. It may prove to be the ‘double helix’ of intelligence.

To provide some context for this paper:

• The six variants of ICMUP, for which the SPMA concept is a generalisation, are the basis of arguments, in another paper [11], that much of mathematics, perhaps all of it, may be seen as a set of techniques for the compression of information, and their application.

• Given the common ground between ‘IC as a foundation for intelligence’ and ‘IC as a foundation for mathematics’, there is potential for the creation of a New Mathematics as an amalgamation of mathematics with current or future versions of the SPTI. The potential strengths of such a New Mathematics include a synergy between mathematics and the SPTI, meaning the potential for many applications beyond the sum of those encompassed by mathematics alone and the SPTI alone.

Appendix (8)

Strengths of the SPTI (Appendix A)

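This appendix summarises the broad strengths of the SPTI: Appendix A.1 summarises the intelligence-related strengths of the SPTI; Appendix A.2 summarises the representation and processing of several kinds of intelligence-related knowledge; Appendix A.3 summarises the seamless integration of diverse aspects of intelligence and diverse kinds of knowledge, in any combination; Appendix A.4 summarises some other potential benefits and applications of the SPTI, less closely related to AI; and Appendix A.5 summarises the results of a paper showing the potential of the SPTI to solve 20 significant problems in AI research.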

Summary of the intelligence-related strengths of the SPTI (Appendix A.1)

The strengths of the SPTI in intelligence-related functions and other attributes are summarised in this appendix. Further information about the intelligence-related strengths of the SPTI may be found in [1, 2], on the CognitionResearch.org website (accessed on 22 November 2022), and in other sources referenced below.

Most of the intelligence-related capabilities described in this appendix are demonstrable with the SPCM. Exceptions to that rule are noted at appropriate points below. More detail about the several topics outlined below may be found in [1, 2].

• Compression and Decompression of Information. In view of substantial evidence for the importance of IC in HLPC, IC should be seen as an important feature of human intelligence. Paradoxical as this may seem, the SPCM provides for decompression of information via the compression of information [2].

• Natural Language Processing. Under the general heading of ‘Natural Language Processing’ are capabilities that facilitate the learning and use of natural languages. These include:

o The ability to structure syntactic and semantic knowledge into hierarchies of classes and sub-classes, and into parts and sub-parts.

o The ability to integrate syntactic and semantic knowledge.

o The ability to encode discontinuous dependencies in syntax, such as the number dependency (singular or plural) between the subject of a sentence and its main verb, or gender dependencies (masculine or feminine) in French, where ‘discontinuous’ means that the dependencies can jump over arbitrarily large intervening structures. Also important in this connection is that different kinds of dependency (e.g., number or gender) can co-exist without interfering with each other.

o The ability to accommodate recursive structures in syntax.

o The production of natural language. A point of interest here is that the SPCM provides for the production of language as well as the analysis of language, and it uses exactly the same processes to achieve IC in the two cases, in the same way that the SPCM uses exactly the same processes for both the compression and decompression of information [2].

• Recognition and Retrieval. Capabilities that facilitate recognition of entities or retrieval of information include:

o The ability to recognise something or retrieve information on the strength of a good partial match between features as well as an exact match.

o Recognition or retrieval within a class-inclusion hierarchy with ‘inheritance’ of attributes, and recognition or retrieval within a hierarchy of parts and sub-parts.

o ‘Semantic’ kinds of information retrieval: retrieving information via ‘meanings’.

o Computer vision [12], including visual learning of 3D structures [4].

• Several Kinds of Probabilistic Reasoning. The capabilities of the SPTI in probabilistic reasoning are described most fully in [2] and more briefly in [1]. The core idea is that any Old SP-symbol in an SPMA that is not aligned with a New SP-symbol represents an inference that may be drawn from that SPMA.

The SPTI’s capabilities in this area include:

o One-Step ‘Deductive’ Reasoning. A simple example of modus ponens syllogistic reasoning goes like this:

➢ If something is a bird then it can fly.

➢ Tweety is a bird.

➢ Therefore, Tweety can fly.

o Abductive Reasoning. Abductive reasoning is more obviously probabilistic than deductive reasoning [14]. For example:

One morning you enter the kitchen to find a plate and a cup on the table, with breadcrumbs and a pat of butter on the plate, surrounded by a jar of jam, a pack of sugar, and an empty carton of milk. You conclude that one of your house-mates got up at night to make him- or herself a midnight snack and was too tired to clear the table.

In short, abductive reasoning seeks the simplest and most likely conclusion from a set of observations.

o Probabilistic Networks and Trees. One of the simplest kinds of system that supports reasoning in more than one step (as well as one-step reasoning) is a ‘decision network’ or a ‘decision tree’. In such a system, a path is traced through the network or tree from a start node to one of two or more alternative destination nodes, depending on the answers to multiple-choice questions at intermediate nodes. Any such network or tree may be given a probabilistic dimension by attaching a value for probability or frequency to each of the alternative answers to questions at the intermediate nodes.

o Reasoning With ‘Rules’. SP-patterns may serve very well within the SPCM for the expression of such probabilistic regularities as ‘sunshine with broken glass may create fire’, ‘matches create fire’, and the like. Alongside other information, rules like those may help determine one or more of the more likely scenarios leading to the burning down of a building, or a forest fire.

o Nonmonotonic Reasoning. An example showing how the SPTI can perform nonmonotonic reasoning is described in [2, 1]. The conclusion that ‘Socrates is mortal’, deduced from ‘All humans are mortal’ and ‘Socrates is human’, remains true for all time, regardless of anything we learn later. By contrast, the inference that ‘Tweety can probably fly’ from the propositions that ‘Most birds fly’ and ‘Tweety is a bird’ is nonmonotonic because it may be changed if, for example, we learn that Tweety is a penguin.

o ‘Explaining Away’. This means ‘If A implies B, C implies B, and B is true, then finding that C is true makes A less credible.’ In other words, finding a second explanation for an item of data makes the first explanation less credible.

o Spatial Reasoning. There is also potential in the system for spatial reasoning, as described in [5].

o What-If Reasoning. There is similar potential for what-if reasoning, as described in [5].

• Planning and Problem Solving. Capabilities here include:

o The ability to plan a route, such as a flying route between cities A and B, given information about direct flights between pairs of cities, including those that may be assembled into a route between A and B.

o The ability to solve geometric analogy problems, or analogues in textual form.

• Unsupervised Learning. The SPCM may achieve unsupervised learning from a body of ‘raw’ data, I, to create an SP-grammar, G, and an encoding of I in terms of G, where the encoding may be referred to as E [2]. At present the learning process has shortcomings summarised in [1], but it appears that these problems may be overcome. In its essentials, unsupervised learning in the SPCM means searching for one or more ‘good’ SP-grammars, where a good SP-grammar is a set of SP-patterns which is relatively effective in the economical encoding of I via SP-Multiple-Alignment. This kind of learning includes the discovery of segmental structures in data (including hierarchies of segments and subsegments) and the learning of classes (including hierarchies of classes and subclasses).
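The ‘Explaining Away’ pattern listed above can be made concrete with a small worked example. The sketch below is not how the SPCM works (the SPCM derives probabilities from the compression achieved by SPMAs); it is ordinary Bayesian inference by enumeration, under the assumptions that A and C are independent causes with hypothetical prior probabilities of 0.1 each, and that B is true exactly when A or C is true. Borrowing the ‘Rules’ example above, A might be a dropped match, C sunshine focused by broken glass, and B a fire.

```python
from itertools import product

# Hypothetical priors for two independent causes of B, e.g.
# A = "a dropped match" and C = "sunshine focused by broken glass".
P_A = 0.1
P_C = 0.1

def prior(a, c):
    """Prior probability of one combination of truth values for A and C."""
    return (P_A if a else 1 - P_A) * (P_C if c else 1 - P_C)

def prob_a_given(evidence):
    """P(A | evidence) by enumerating every possible world.

    Assumption: B (e.g. "fire") is true exactly when A or C is true.
    `evidence` maps any of "A", "B", "C" to True or False.
    """
    numerator = denominator = 0.0
    for a, c in product([True, False], repeat=2):
        world = {"A": a, "B": a or c, "C": c}
        # Keep only the worlds that agree with the evidence.
        if all(world[name] == value for name, value in evidence.items()):
            p = prior(a, c)
            denominator += p
            if a:
                numerator += p
    return numerator / denominator

print(round(prob_a_given({}), 3))                      # 0.1   (prior)
print(round(prob_a_given({"B": True}), 3))             # 0.526 (B raises belief in A)
print(round(prob_a_given({"B": True, "C": True}), 3))  # 0.1   (C explains B away)
```

Observing B raises the probability of A from 0.1 to about 0.53; learning that C is also true returns A to its prior, which is the sense in which the second explanation makes the first ‘less credible’.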

The representation and processing of several kinds of intelligence-related knowledge (Appendix A.2)

Although SP-patterns are not very expressive in themselves, they come to life in the SPMA framework within the SPCM. Within the SPMA framework, they provide relevant knowledge for each aspect of intelligence mentioned in Appendix A.1.

More specifically, they may serve in the representation and processing of such things as: the syntax of natural languages; Class-Inclusion Hierarchies (with or without cross classification); Part-Whole Hierarchies; discrimination networks and trees; if-then rules; entity-relationship structures [15]; relational tuples [15]; and concepts in mathematics, logic, and computing, such as ‘function’, ‘variable’, ‘value’, ‘set’, and ‘type definition’ [2, 16, 17].
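As a rough illustration of how a class-inclusion hierarchy supports inheritance, and of the core idea in Appendix A.1 that Old SP-symbols not aligned with New SP-symbols represent inferences, here is a toy sketch. It is a simplification under stated assumptions, not the SPCM: each hypothetical class is stored as a parent name plus a set of attributes rather than as an SP-pattern, and the best match is chosen by a simple count rather than by the compression-based evaluation of SPMAs.

```python
# Hypothetical "Old" knowledge for a tiny class-inclusion hierarchy:
# each class names its parent class (or None) and its own attributes.
OLD = {
    "animal": (None, {"breathes", "eats"}),
    "bird": ("animal", {"has-wings", "has-feathers", "can-fly"}),
    "fish": ("animal", {"has-fins", "swims"}),
}

def inherited(cls):
    """All attributes of `cls`, including those inherited from its ancestors."""
    attrs = set()
    while cls is not None:
        parent, own = OLD[cls]
        attrs |= own
        cls = parent
    return attrs

def recognise(new):
    """Choose the class that best matches the set of New features (partial
    matches allowed) and return it with the attributes it supplies that have
    no New counterpart, i.e. the 'inferences'."""
    def score(cls):
        attrs = inherited(cls)
        matched = len(new & attrs)
        unexplained = len(new - attrs)
        # Prefer more matches and fewer unexplained New features; on a tie,
        # prefer the class that makes fewer unsupported guesses.
        return (matched - unexplained, -len(attrs - new))
    best = max(OLD, key=score)
    return best, sorted(inherited(best) - new)

# Tweety: only feathers and wings are observed.
print(recognise({"has-feathers", "has-wings"}))
# ('bird', ['breathes', 'can-fly', 'eats'])
```

The attributes returned alongside ‘bird’ play the role of the unaligned Old SP-symbols, as in the Tweety example. A fuller model would also need exceptions, such as a penguin that cannot fly, which this sketch does not attempt.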

As noted in Section 2, the addition of two-dimensional SP-patterns to the SPCM is likely to expand the capabilities of the SPTI to include the representation and processing of structures in two and three dimensions, and the representation of procedural knowledge with parallel processing.

The seamless integration of diverse aspects of intelligence, and diverse kinds of knowledge, in any combination (Appendix A.3)

An important additional feature of the SPCM, alongside its versatility in aspects of intelligence and diverse forms of reasoning, and its versatility in the representation and processing of diverse kinds of knowledge, is that there is clear potential for the SPTI to provide for the seamless integration of diverse aspects of intelligence and diverse forms of knowledge, in any combination. This is because those several aspects of intelligence and several kinds of knowledge all flow from a single coherent framework: SP-patterns and SP-symbols (Section 2), and the SPMA concept (Section 3).

It appears that this kind of seamless integration is essential in any artificial system that aspires to AGI.

Figure 11 shows schematically how the SPTI, with the SPMA at centre stage, exhibits versatility and seamless integration.

Some of the potential benefits and applications of the SPTI (Appendix A.4)

Here’s a summary of peer-reviewed papers that have been published about potential benefits and applications of the SPTI:

• Overview. The SPTI promises deeper insights and better solutions in several areas of application, including unsupervised learning, natural language processing, autonomous robots, computer vision, intelligent databases, software engineering, information compression, medical diagnosis and big data. There is also potential in areas such as the semantic web, bioinformatics, structuring of documents, the detection of computer viruses, data fusion, new kinds of computer, and the development of scientific theories. The theory promises seamless integration of structures and functions within and between different areas of application [16].

• The Development of Intelligence in Autonomous Robots. The SPTI opens up a radically new approach to the development of intelligence in autonomous robots [5].

• The Management of Big Data. There is potential in the SPTI for the management of big data in the following areas: overcoming the problem of variety in big data; the unsupervised learning or discovery of ‘natural’ structures in big data; the interpretation of big data via pattern recognition, parsing and more; assimilating information as it is received, much as people do; making big data smaller; economies in the transmission of data; and more [18].

• Commonsense Reasoning and Commonsense Knowledge. Largely because of research by Ernest Davis and Gary Marcus (see, for example, [19]), the challenges in this area of AI research are now better known. Preliminary work shows that the SPTI has promise in this area [20].

• An Intelligent Database System. The SPTI has potential in the development of an intelligent database system with several advantages compared with traditional database systems [15].

• Medical Diagnosis. The SPTI may serve as a vehicle for medical knowledge and to assist practitioners in medical diagnosis, with potential for the automatic or semi-automatic learning of new knowledge [21].

• Natural Language Processing. The SPTI has strengths in the processing of natural language [1, 2].

• Sustainability. The SP Machine (Section 2.3) has the potential to reduce demands for energy from IT, especially in AI applications and in the processing of big data, in addition to reductions in CO2 emissions when the energy comes from the burning of fossil fuels. There are several other possibilities for promoting sustainability [22].

• Transparency. The SPTI with the SPCM provides complete transparency in the way knowledge is structured and in the way knowledge is processed [23].

• Vision, Both Artificial and Natural. The SPTI opens up a new approach to the development of computer vision and its integration with other aspects of intelligence, and it throws light on several aspects of natural vision [4, 24].

The clear potential of the SPTI to solve 20 significant problems in AI research (Appendix A.5)

Strong evidence in support of the SPTI has arisen, indirectly, from the book Architects of Intelligence by science writer Martin Ford [25]. To prepare for the book, he interviewed several influential experts in AI to hear their views about AI research, including opportunities and problems in the field. He writes [25]:

The purpose of this book is to illuminate the field of artificial intelligence—as well as the opportunities and risks associated with it— by having a series of deep, wide-ranging conversations with some of the world’s most prominent AI research scientists and entrepreneurs.

In the book, Ford reports what the AI experts say, giving them the opportunity to correct errors he may have made so that the text is a reliable description of their thinking.

This source of information has proved to be very useful in defining problems in AI research that influential experts in AI deem to be significant. This has been important from the SP perspective because, with 17 of those problems and three others—20 in all—there is clear potential for the SPTI to provide a solution.

Since these are problems with broad significance, not micro-problems of little consequence, the clear potential of the SPTI to solve them is a major result from the SP programme of research, demonstrating some of the power of the SPTI.

A peer-reviewed paper [26] describes those 20 significant problems and how the SPTI may solve them, with pointers to where fuller information may be found. The following summary describes each of the problems briefly, with a summary of how the SPTI may solve it:

1. The Symbolic Versus Sub-Symbolic Divide. The need to bridge the divide between symbolic and sub-symbolic kinds of knowledge and processing [26]. The concept of an SP-symbol can represent a relatively large symbolic kind of thing, such as a word, or a relatively fine-grained kind of thing, such as a pixel.

2. Errors in Recognition. The tendency of DNNs to make large and unexpected errors in recognition [26]. The overall workings of the SPTI and its ability to correct errors in data [1] suggest that it is unlikely to suffer from these kinds of error.

3. Natural Languages. The need to strengthen the representation and processing of natural languages, including the understanding of natural languages and the production of natural language from meanings [26]. The SPTI has potential in the representation and processing of several aspects of natural language.

4. Unsupervised Learning. Overcoming the challenges of unsupervised learning. Although DNNs can be used in unsupervised mode, they seem to lend themselves best to the supervised learning of tagged examples [26]. Learning in the SPTI is entirely unsupervised. It is clear that most human learning, including the learning of our first language or languages [27], is achieved via unsupervised learning, without needing tagged examples, or reinforcement learning, or a ‘teacher’, or other form of assistance in learning [28]. Incidentally, a working hypothesis in the SP programme of research is that unsupervised learning can be the foundation for all other forms of learning, including learning by imitation, learning by being told, learning with rewards and punishments, and so on.

5. Generalisation. The need for a coherent account of generalisation, under-generalisation (over-fitting), and over-generalisation (under-fitting) [26]. Although this is not mentioned in Ford’s book [25], there is the related problem of reducing or eliminating the corrupting effect of errors in the data which is the basis of learning. The SPTI provides a coherent account of generalisation, of the correction of over- and under-generalisations, and of how to avoid the corrupting effect of errors in data.

6. One-Shot Learning. Unlike people, DNNs are ill-suited to the learning of usable knowledge from one exposure or experience [26]. The ability to learn usable knowledge from a single exposure or experience is an integral and important part of the SPTI.

7. Transfer Learning. Although transfer learning (incorporating old learning in newer learning) can be done to some extent with DNNs [29], DNNs fail to capture the fundamental importance of transfer learning for people, or the central importance of transfer learning in the SPCM [26]. Transfer learning is an integral and important part of how the SPTI works.

8. Reducing Computational Demands. How to increase the speed of learning by DNNs, and how to reduce the demands of DNNs for large volumes of data and for large computational resources [26]. The ability of the SPTI to learn from a single exposure or experience, and the fact that transfer learning is an integral part of how it works, are likely to mean greatly reduced computational demands for the SPTI.

9. Transparency. The need for transparency in the representation and processing of knowledge [26]. By contrast with DNNs, which are opaque in how they represent knowledge and how they process it, the SPTI is entirely transparent in both the representation and processing of knowledge.

10. Probabilistic Reasoning. How to achieve probabilistic reasoning that integrates with other aspects of intelligence [26]. The SPTI is entirely probabilistic in all its inferences, including the forms of reasoning described in [2].

11. Commonsense Reasoning and Commonsense Knowledge. Unlike probabilistic reasoning, the area of commonsense reasoning and commonsense knowledge is surprisingly challenging [26]. With qualifications, the SPTI shows some promise in this area [30, 31].

12. How to Minimise the Risk of Accidents with Self-Driving Vehicles. Notwithstanding the hype about self-driving vehicles, there are still significant problems in minimising the risk of accidents with such vehicles [26]. The SPTI has potential in this area [32].

13. Compositionality in the Representation of Knowledge. DNNs are not well suited to the representation of Part-Whole Hierarchies or Class-Inclusion Hierarchies in knowledge [26]. By contrast, the SPTI has robust capabilities in this area.

14. Establishing the Importance of Information Compression in AI Research. There is a need to spread the word about the significance of IC in both HLPC and AI [26]. The importance of IC in HLPC is described in [33], and its importance in the SPTI is described in this and most other publications about the SPTI.

15. Establishing the Importance of a Biological Perspective in AI Research. There is a need to raise awareness of the importance of a biological perspective in AI research [26]. This is very much part of the SPTI research and publicity for that research.

16. Distributed Versus Localist Representations for Knowledge. A persistent issue in studies of HLPC and in AI is whether knowledge in brains is represented in distributed or localist form, and which of those two forms works best in AI systems [26]. DNNs employ a distributed form for knowledge, but the SPTI, which is firmly in the localist camp, has distinct advantages compared with DNNs. This reinforces other evidence for localist representations in brains.

17. The Learning of Structures From Raw Data. DNNs are weak in the learning of structures from raw data [26]. By contrast, this is a clear advantage in the workings of the SPTI.

18. The Need to Encourage Top-Down Strategies in AI Research. Most AI research has adopted a bottom-up strategy, but this is failing to deliver generality in solutions [26]. In the quest for AGI, there are clear advantages in adopting a top-down strategy [3].

19. Overcoming the Limited Scope for Adaptation in Deep Neural Networks. An apparent problem with DNNs is that, unless many DNNs are joined together, each one is designed to learn only one concept, and the learning is restricted to what can be done with a fixed set of layers [26]. By contrast, the SPTI, like people, can learn multiple concepts, and these multiple concepts are often in hierarchies of classes or in Part-Whole Hierarchies. This adaptability is largely because, via the SPMA concept, many different SP-Multiple-Alignments may be created in response to one body of data.

20. The Problem of Catastrophic Forgetting. Although there are somewhat clumsy workarounds for this problem, an ordinary DNN is prone to the problem of catastrophic forgetting, meaning that new learning wipes out old learning [26]. There is no such problem with the SPTI, which may store new learning independently of old learning, or form composite structures which preserve both old and new learning, in the manner of transfer learning (above).
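In the SP programme, unsupervised learning is framed as a search for an SP-grammar G that yields an economical encoding E of the raw data I (see ‘Unsupervised Learning’ in Appendix A.1), and the points about unsupervised learning, generalisation, and learning structures from raw data in items 4, 5, and 17 can be illustrated in those terms. The sketch below compares the combined size of G and E for three hypothetical grammars: one that records no structure and so would accept any sequence of symbols (over-generalisation), one that stores the data by rote (under-generalisation, or over-fitting), and one that captures the repeated segment. It assumes, purely for illustration, that every symbol, including each code, costs one unit and that encoding is a greedy longest match; the SPCM's search and its measures of information are considerably more sophisticated.

```python
def encode(data, grammar):
    """Greedy longest-match encoding of the string `data` using `grammar`,
    a dict mapping each code to the pattern it stands for."""
    # Try longer patterns first, so a rote copy of the data wins over sub-parts.
    patterns = sorted(grammar.items(), key=lambda item: -len(item[1]))
    encoding, i = [], 0
    while i < len(data):
        for code, pattern in patterns:
            if data.startswith(pattern, i):
                encoding.append(code)
                i += len(pattern)
                break
        else:
            # No pattern matches here: the raw symbol is copied into E.
            encoding.append(data[i])
            i += 1
    return encoding

def total_size(data, grammar):
    """Size of G plus size of E, counting one unit per symbol (codes included)."""
    size_g = sum(1 + len(pattern) for pattern in grammar.values())
    size_e = len(encode(data, grammar))
    return size_g + size_e

data = "abcabcabcabc"
candidates = {
    "over-general": {},        # no patterns: E is just the raw data
    "good": {"X": "abc"},      # the repeated segment unified into one pattern
    "rote": {"Y": data},       # the whole body of data stored as one pattern
}
sizes = {name: total_size(data, g) for name, g in candidates.items()}
print(sizes)                      # {'over-general': 12, 'good': 8, 'rote': 14}
print(min(sizes, key=sizes.get))  # good
```

The rote grammar makes E tiny but G large, the empty grammar does the reverse, and the grammar that unifies the repeated segment ‘abc’ gives the smallest total. With real data, symbol counts would be replaced by a proper measure of information, such as coding costs in bits.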

Abbreviations (Section 9)

Abbreviations used in this paper are detailed here.

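AGI Artificial General Intelligence

AI Artificial Intelligence

DNN Deep Neural Network

HLPC Human Learning, Perception, and Cognition

IC Information Compression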

ICMUP Information Compression via the Matching and Unification of Patterns

SPCM SP Computer Model

SPMA SP-Multiple-Alignment

SPTI SP Theory of Intelligence

Acknowledgements (Section 10)

I’m grateful for constructive comments on this research by many people.

Software Availability (Section 11)

The software for the SP Computer Model is available via links under the heading “SOURCE CODE” on this web page: tinyurl.com/3myvk878. The software is also available as ‘SP71’, under ‘Gerry Wolff’, in Code Ocean (codeocean.com/dashboard).

References (Section 12)

- Wolff JG. The SP Theory of Intelligence: an overview. Information. 2013:4(3);283-341.

- Wolff JG. Unifying Computing and Cognition: the SP Theory and Its Applications. Menai Bridge. 2006.

- Wolff JG. Intelligence Via Compression of Information. 2023.

- Wolff JG. Application of the SP Theory of Intelligence to the understanding of natural vision and the development of computer vision. SpringerPlus. 2014:3(1);552-70.

- Wolff JG. Autonomous robots and the SP Theory of Intelligence. IEEE Access. 2014:2;1629-51.

- Wolff JG. Information compression, multiple alignment, and the representation and processing of knowledge in the brain. Front. Psychol. 2016:7(1584).

- Palade V, Wolff JG. A roadmap for the development of the ‘SP Machine’ for artificial intelligence. Comput J. 2019:62;1584-604.

- Cover TM, Thomas JA. Elements of Information Theory. John Wiley & Sons. 2006.

- Miller GA. The magical number seven, plus or minus two: some limits on our capacity for processing information. Psychol Rev. 1956:63;81-97.

- Birtwistle GM, Dahl OJ, Myhrhaug B, et al. Simula Begin. Stud litt. 1973.

- Wolff JG. Mathematics as information compression via the matching and unification of patterns. Complexity. 2019:25.

- Rovelli C. Reality Is Not What It Seems: The Journey to Quantum Gravity. Penguin Books. 2016.

- Edwards AW. Cogwheels of the Mind: The Story of Venn Diagrams. Johns Hopkins Univ Press. 2004.

- Douven I. Abduction. In: Zalta EN, editor. The Stanford Encyclopedia of Philosophy. 2011.

- Wolff JG. Towards an intelligent database system founded on the SP Theory of Computing and Cognition. Data Knowl Eng. 2007:60;596-624.

- Wolff JG. The SP Theory of Intelligence: benefits and applications. Information. 2014:5(1);1-27.

- Wolff JG. Software engineering and the SP Theory of Intelligence. 2017.

- Wolff JG. Big data and the SP Theory of Intelligence. IEEE Access. 2014:2;301-15.

- Davis E, Marcus G. Commonsense reasoning and commonsense knowledge in artificial intelligence. Commun ACM. 2015:58(9);92-103.

- Wolff JG. Commonsense reasoning, commonsense knowledge, and the SP Theory of Intelligence. 2019.

- Wolff JG. Medical diagnosis as pattern recognition in a framework of information compression by multiple alignment, unification and search. Decis Support Syst. 2006:42;608-25.

- Wolff JG. How the SP System may promote sustainability in energy consumption in IT systems. Sustainability. 2021:13(8);1-21.

- Wolff JG. Transparency and granularity in the SP Theory of Intelligence and its realisation in the SP Computer Model. Interpret Artif Intell: Perspect Granul Comput. 2021:187-216.

- Wolff JG. The potential of the SP System in machine learning and data analysis for image processing. Big Data Cogn Comput. 2021:5(1);7.

- Ford M. Architects of Intelligence: the Truth About AI From the People Building It. Packt Publ. 2018.

- Wolff JG. Twenty significant problems in AI research, with potential solutions via the SP Theory of Intelligence and its realisation in the SP Computer Model. Foundations. 2022:2;1045-79.

- Wolff JG. Learning syntax and meanings through optimization and distributional analysis. In Categ Process Lang Acquis. 1988:179-215.

- Gold EM. Language identification in the limit. Inf Control. 1967:10;447-74.

- Schmidhuber J. One big net for everything. 2018.

- Wolff JG. The SP Theory of Intelligence, and its realisation in the SP Computer Model as a foundation for the development of artificial general intelligence. Analytics. 2023:2(1);163-92.

- Wolff JG. Interpreting Winograd Schemas via the SP Theory of Intelligence and its realisation in the SP Computer Model. 2018.

- Wolff JG. A proposed solution to problems in learning the knowledge needed by self-driving vehicles. 2021.

- Wolff JG. Information compression as a unifying principle in human learning, perception, and cognition. Complexity. 2019:38.